TL;DR – Use Data Pump.

How to take an export Data Pump from Oracle Autonomous Database (always free)?

–Saeed asks on my how to Import post

Data Pump can be invoked from many different places

Data Pump is a feature of the Oracle Database, and it allows you to either IMPORT or EXPORT data and objects to and from your Oracle Database. It writes to a proprietary format, so it’s great for taking logical backups or for moving data from one Oracle Database to another Oracle Database.

I’m not here to teach you how to use Data Pump. For that, I suggest the always-awesome Tim Hall.

Who’s Tim Hall? He’s the real person behind Oracle-Base. He writes those articles in his spare time. If I were the pope, he’s be Saint Tim, canonized in his own lifetime of course!

SOME ways to invoke Data Pump

- Data Pump command line utilities (expdp and impdp) – requires an Oracle Client install

- PL/SQL and DBMS_DATAPUMP (requires an Oracle Database Connection)

- GUIs and tools and such (SQL Developer has a Data Pump Wizard)

- REST APIs – requires Oracle REST Data Services

- Using the DP command in SQLcl

Some things you should know about Data Pump

- run right now, or scheduled to run later

- compress and/or encrypt your exports

- writes the resulting DMP files to the database server, to the database directory specified

- generates logs so you can see what’s happening with your job

- can take awhile to run depending on how much you’re asking it to do

- you might want to look at the parallel option

- beware the Time Zone Versions of your databases, they need to be compatible!

Basically, just go read the Docs or Tim’s articles before you start to get serious about using Data Pump. The worst thing would be for you to have an Export, only to find out when you need it, that it’s unusable!

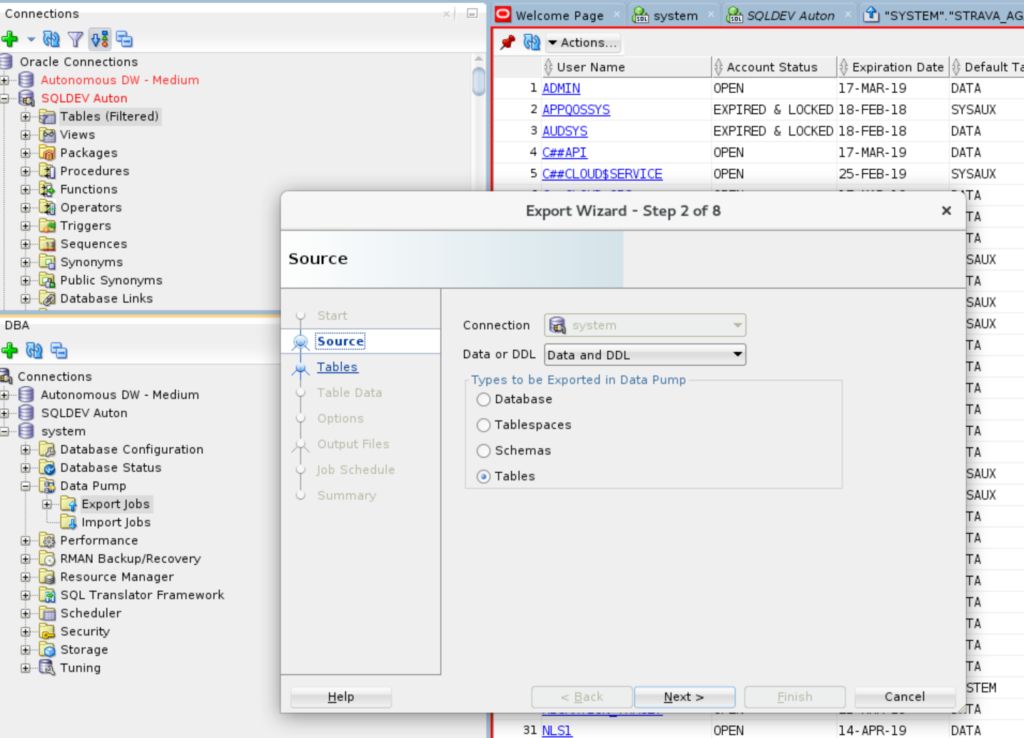

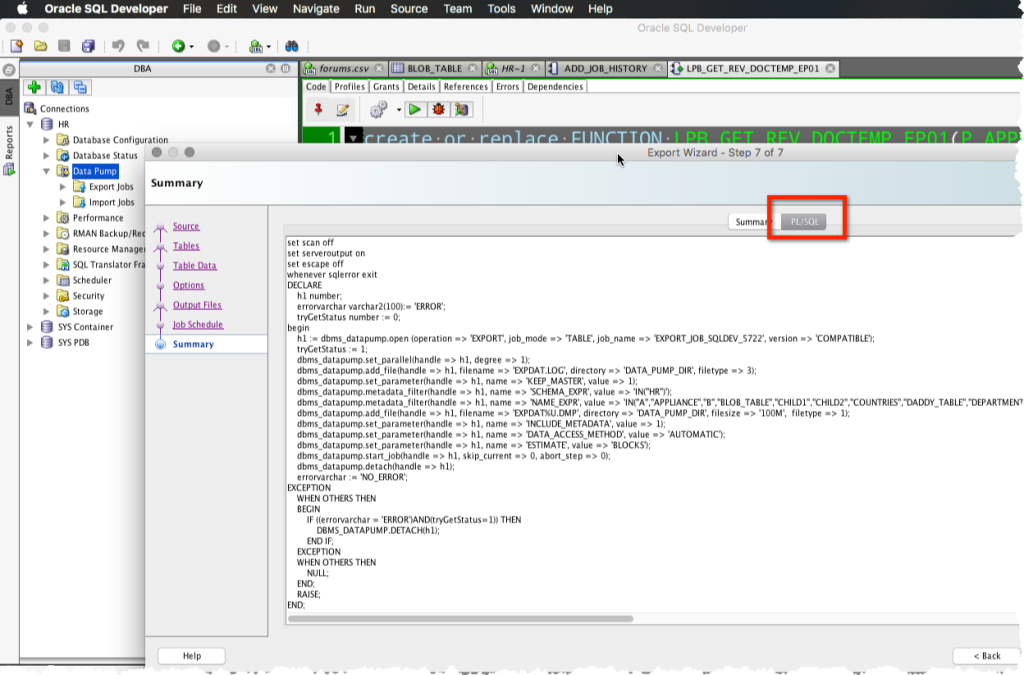

Using SQL Developer Desktop

I’ve already shown how to do this, but effectively you’ll need a connection to your Autonomous Database, then access the DBA Panel, and invoke the Data Pump Wizard.

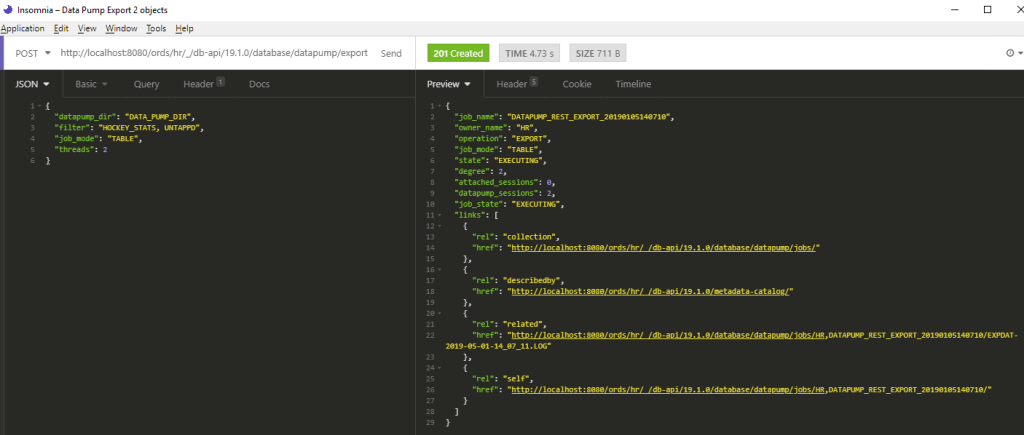

Using REST APIs

Oracle Autonomous Database comes with Oracle REST Data Services (ORDS) and all it’s features, out-of-the-box. That includes our Database REST APIs, which includes endpoints for invoking Data Pump!

I have a complete how-to-use-this post here.

This capability has been around since 2019, give or take. The nice thing about this is the response includes links to follow for monitoring the job via the Log(s).

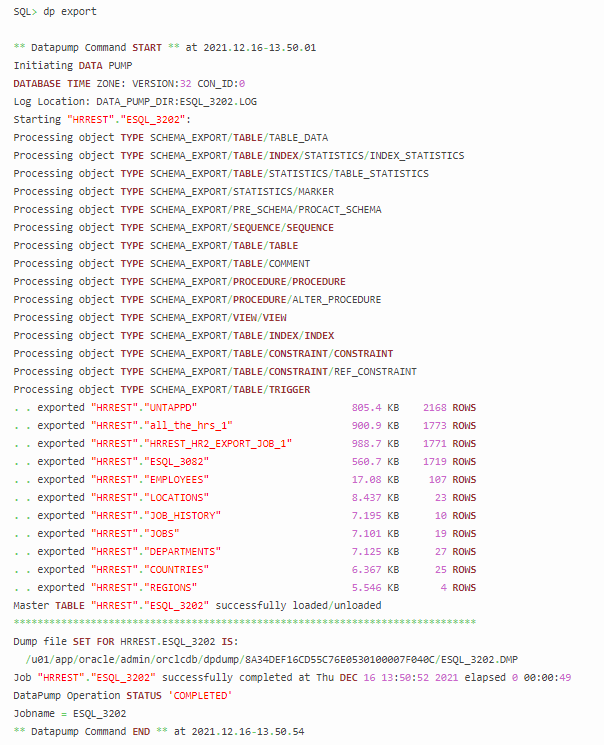

Using SQLcl and it’s new DataPump (DP) Command

Does it get any easier than simply running:

dp export

No, no it does not get any easier than that. Especially when you consider SQLcl is ready to go, out-of-the-box in OCI via CloudShell.

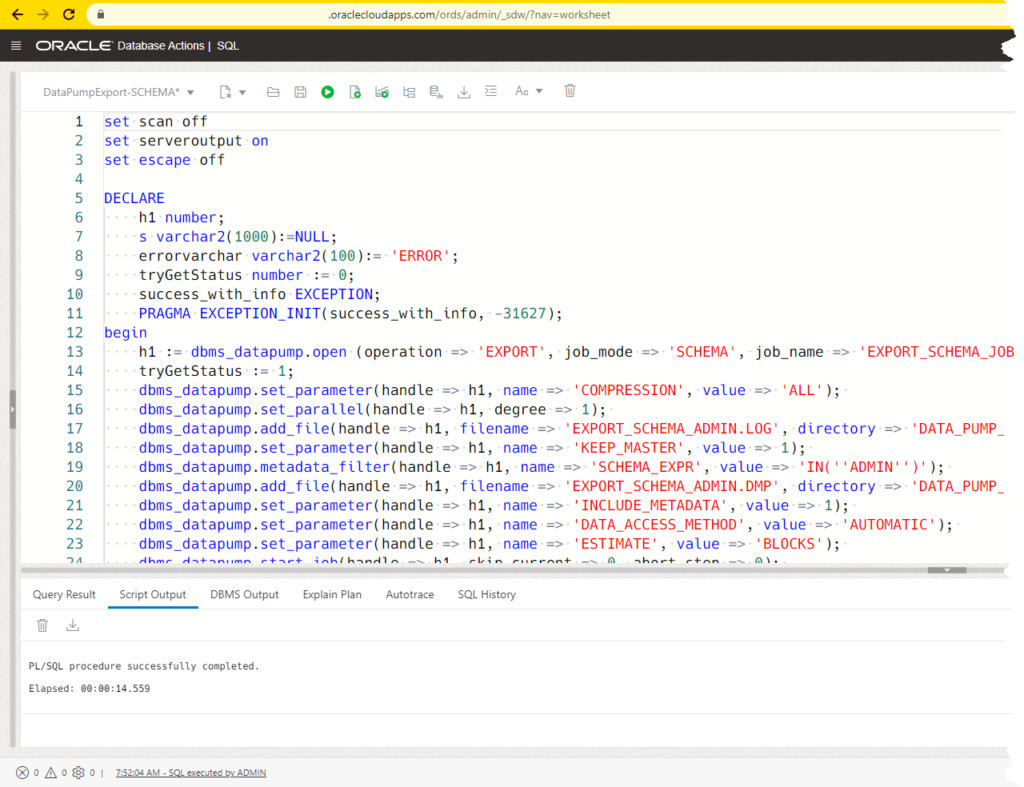

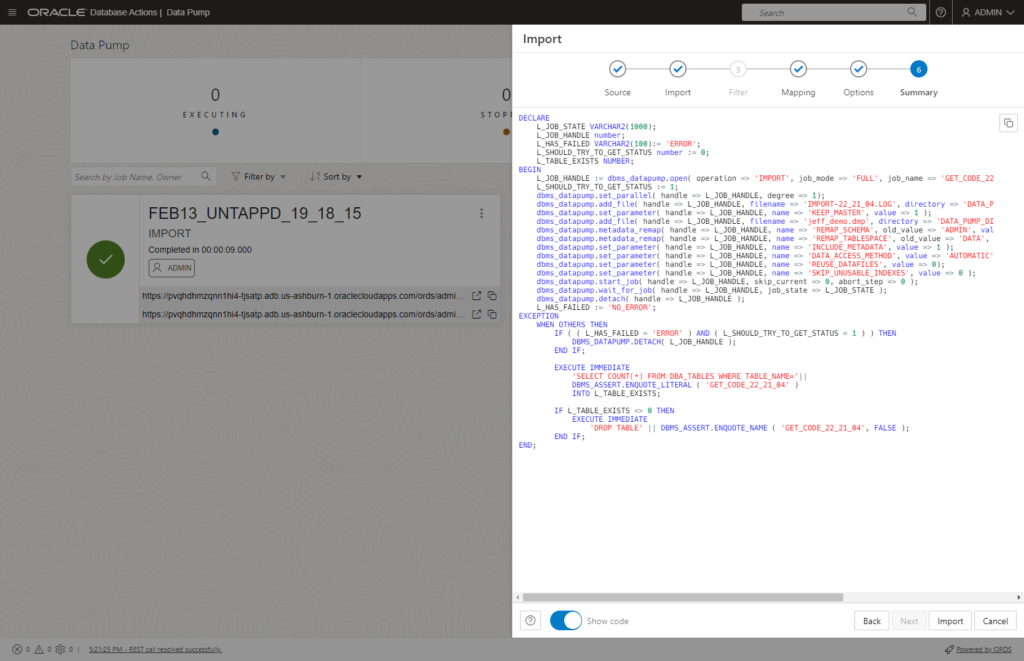

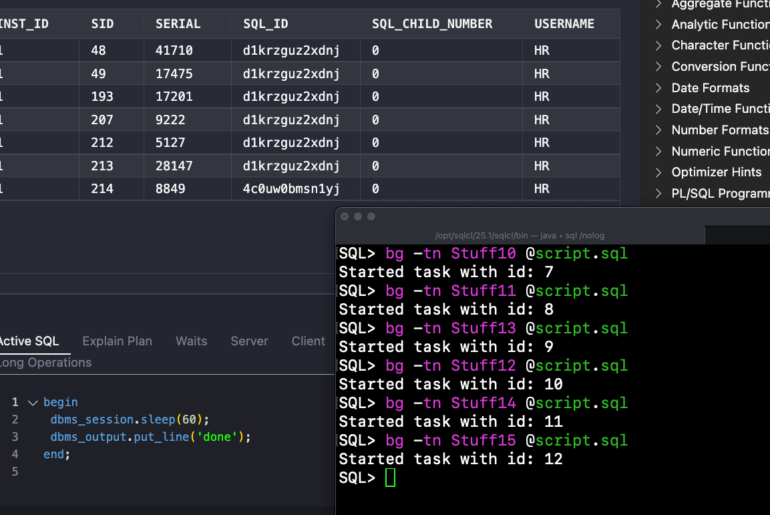

Via PL/SQL..in your SQL Developer Web Worksheet

Let’s say you want to export your ADMIN schema. Now, you shouldn’t be using your ADMIN schema for applications, but it’s often used for ad-hoc operations because it’s convenient. I have most of my demo and 101 scenarios built on ADMIN.

If I want to export those, I can simply run this code.

SET scan off SET serveroutput ON SET escape off DECLARE h1 NUMBER; s VARCHAR2(1000):=NULL; errorvarchar VARCHAR2(100):= 'ERROR'; tryGetStatus NUMBER := 0; success_with_info EXCEPTION; PRAGMA EXCEPTION_INIT(success_with_info, -31627); BEGIN h1 := dbms_datapump.OPEN (operation => 'EXPORT', job_mode => 'SCHEMA', job_name => 'EXPORT_SCHEMA_JOB', version => 'COMPATIBLE'); tryGetStatus := 1; dbms_datapump.set_parameter(handle => h1, name => 'COMPRESSION', VALUE => 'ALL'); dbms_datapump.set_parallel(handle => h1, degree => 1); dbms_datapump.add_file(handle => h1, filename => 'EXPORT_SCHEMA_ADMIN.LOG', directory => 'DATA_PUMP_DIR', filetype => 3); dbms_datapump.set_parameter(handle => h1, name => 'KEEP_MASTER', VALUE => 1); dbms_datapump.metadata_filter(handle => h1, name => 'SCHEMA_EXPR', VALUE => 'IN(''ADMIN'')'); dbms_datapump.add_file(handle => h1, filename => 'EXPORT_SCHEMA_ADMIN.DMP', directory => 'DATA_PUMP_DIR', filesize => '500M', filetype => 1); dbms_datapump.set_parameter(handle => h1, name => 'INCLUDE_METADATA', VALUE => 1); dbms_datapump.set_parameter(handle => h1, name => 'DATA_ACCESS_METHOD', VALUE => 'AUTOMATIC'); dbms_datapump.set_parameter(handle => h1, name => 'ESTIMATE', VALUE => 'BLOCKS'); dbms_datapump.start_job(handle => h1, skip_current => 0, abort_step => 0); dbms_datapump.detach(handle => h1); errorvarchar := 'NO_ERROR'; EXCEPTION WHEN OTHERS THEN BEGIN IF ((errorvarchar = 'ERROR')AND(tryGetStatus=1)) THEN DBMS_DATAPUMP.DETACH(h1); END IF; EXCEPTION WHEN OTHERS THEN NULL; END; RAISE; END; /

Please actually read this code before you use it. Consider encrypting the data pump output, especially if you have any REAL data in it.

Once it’s in your worksheet, just hit the ‘Run’ button.

Tip: You can use the SQL Developer Desktop Wizard to generate this DDL for you.

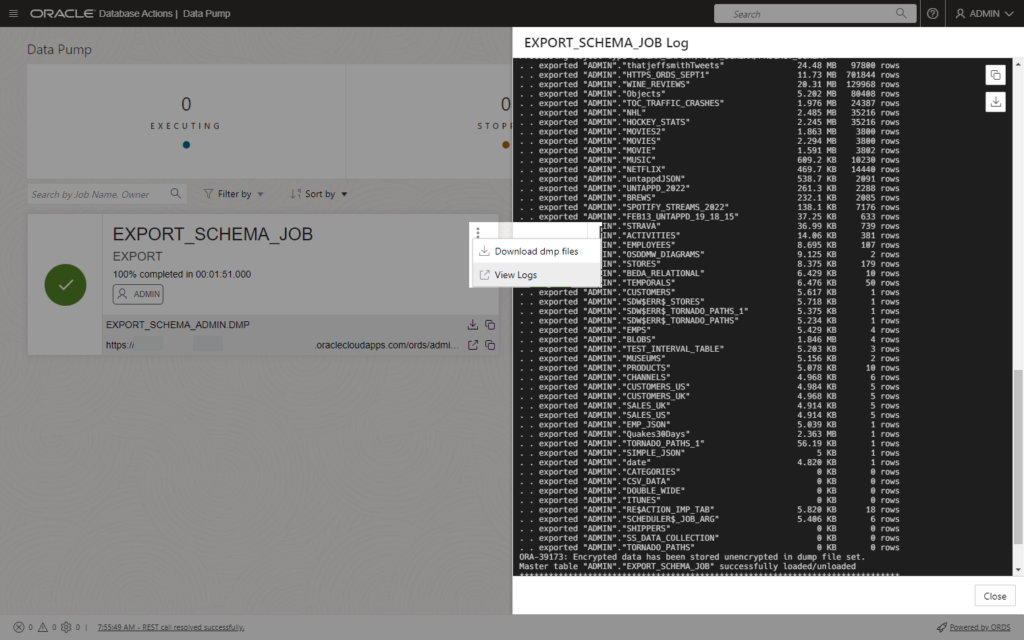

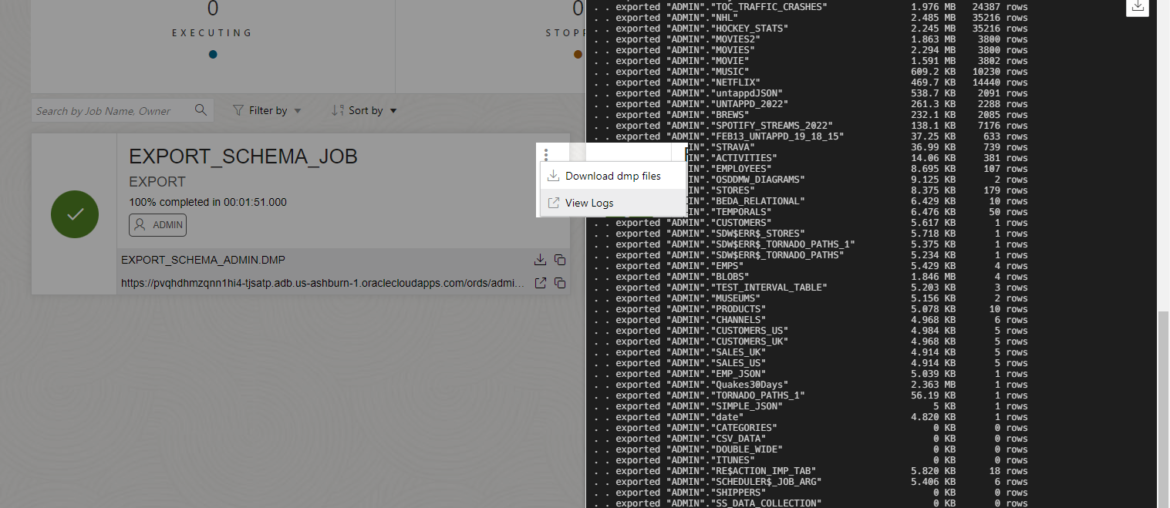

Note that the pl/sql script takes 14’ish seconds to create the job. That’s not how long it takes to actually run the job. That took 1 minute and 51 seconds. Which I know from reading the Log.

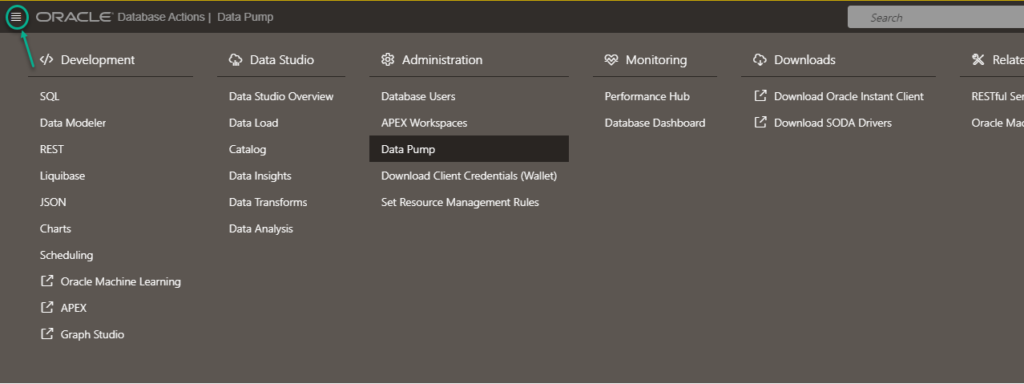

Follow along in the Data Pump page

You can toggle over to the Data Pump page by access the SQL Developer Web Mega Menu, accessible via the Hamburger button in the upper left hand corner.

I set the job name via ‘job_name => ‘EXPORT_SCHEMA_JOB,’ so that’s what I’m looking for here.

Here I can see all of my data has been exported, woohoo!

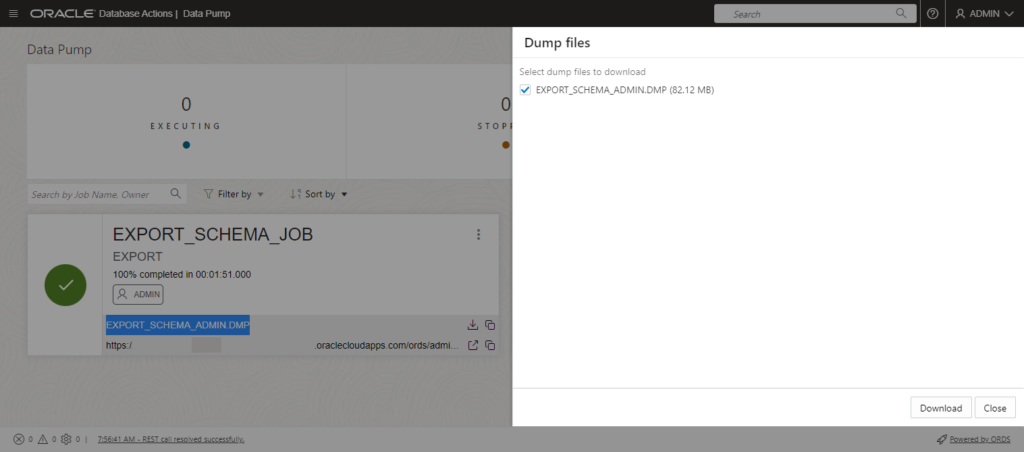

I can also download the DMP file(s).

Why don’t you have an EXPORT Wizard for me to use?

Because we decided to build the IMPORT wizard first. The EXPORT Wizard will show up later this year, stay tuned!

7 Comments

Great post and valuable input from Joe … prompted me to add parameter “reusefile=>1” to the dbms_datapump.add_file calls since I’m using this to take daily backups of my “always free” schema which I then upload to a private Github repository.

Saw comment on OCI recently that always free resources that are idle for 7 days or more will start to be reclaimed – it behoves those of us using always free to ensure we have a robust backup/restore process in place. Github only works as the offsite destination when file sizes are relatively small (<50MB).

You could also keep them on the object store, that’s not going to get reclaimed/destroyed as a resource because you haven’t used your database often enough.

This is a good summary of exp options. Two cautions when using OCI Always-Free: 1.) Export to DATA_PUMP_DIR permanently consumes an equal amount of 20GB free tier allocated DB space for DATA_PUMP_DIR use even if dmp file is deleted after download because space does not shrink after use. Might not seem like a lot, but need to consider 20GB includes not just application data but also database system data tables such as APEX schema and DB tables as well. 2.) Exporting to Object Storage may be a better option to not consume DB resources, but that results in the dmp file being split into segments, which can be a pain to download on a windows platform (CLI command based re-assembly of the segments does not work on windows per Oracle support and testing). Segments need to be downloaded individually and then re-assembled on local PC. Export to object storage does make it easier to import into other OCI DB instances than if DATA_PUMP_DIR

Hope this helps

Joe

Yes, yes, yes to all points. I just wanted the easiest path for folks to follow.

We’re enhancing the data pump wizards to allow for the dmp files to be deleted from the database directories later this year.

Thank you for the prompt execution.

I’ve run the export script in SQL WEB & SQL Developer and got the error.

Tip : Oracle Database Autonomous 21c

ORA-39002: invalid operation

ORA-06512: at line 33

ORA-06512: at “SYS.DBMS_SYS_ERROR”, line 79

ORA-06512: at “SYS.DBMS_DATAPUMP”, line 4929

ORA-06512: at “SYS.DBMS_DATAPUMP”, line 5180

ORA-06512: at line 13

39002. 00000 – “invalid operation”

*Cause: The current API cannot be executed because of inconsistencies

between the API and the current definition of the job.

Subsequent messages supplied by DBMS_DATAPUMP.GET_STATUS

will further describe the error.

*Action: Modify the API call to be consistent with the current job or

redefine the job in a manner that will support the specified API.

I’ve ran this in both my 19c and 21c instances with no problems. I’m supplying this PL/SQL code block as an example, it may need amending to work for you and your environment(s).

Improvement in the wizard is good to hear. It would be REALLY great if there was an option when exporting to object storage to not have the dmp file split into segments or be able to set the size of the segments to reduce or increase the number of them. The segments are a giant pain in the neck if you want to download them locally on a windows machine.