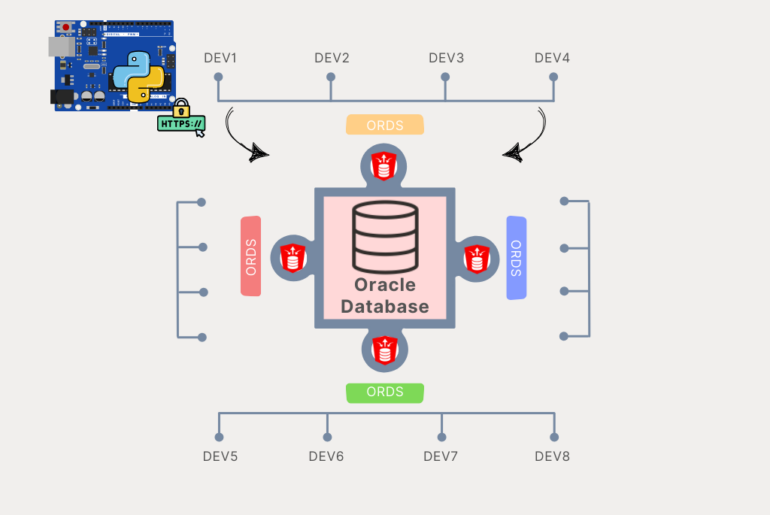

With version 19.1 of Oracle REST Data Services (ORDS), you now have the option of enabling a Database Management REST API. I talked about that last week, and a TON of you clicked into that story, thanks!

While it’s both fun and easy to demo GETs and imagine the reports you could build with the output, today I wanted to demonstrate something a bit more involved.

Have you ever needed to Data Pump EXPORT some or all of your database? Well now you can do that with a REST call via ORDS.

I want to export two tables, my UNTAPPD and HOCKEY_STATS tables. Should these tables be in a Human Resources schema? No. Are they there anyway? Yes 🙂

So let’s get started.

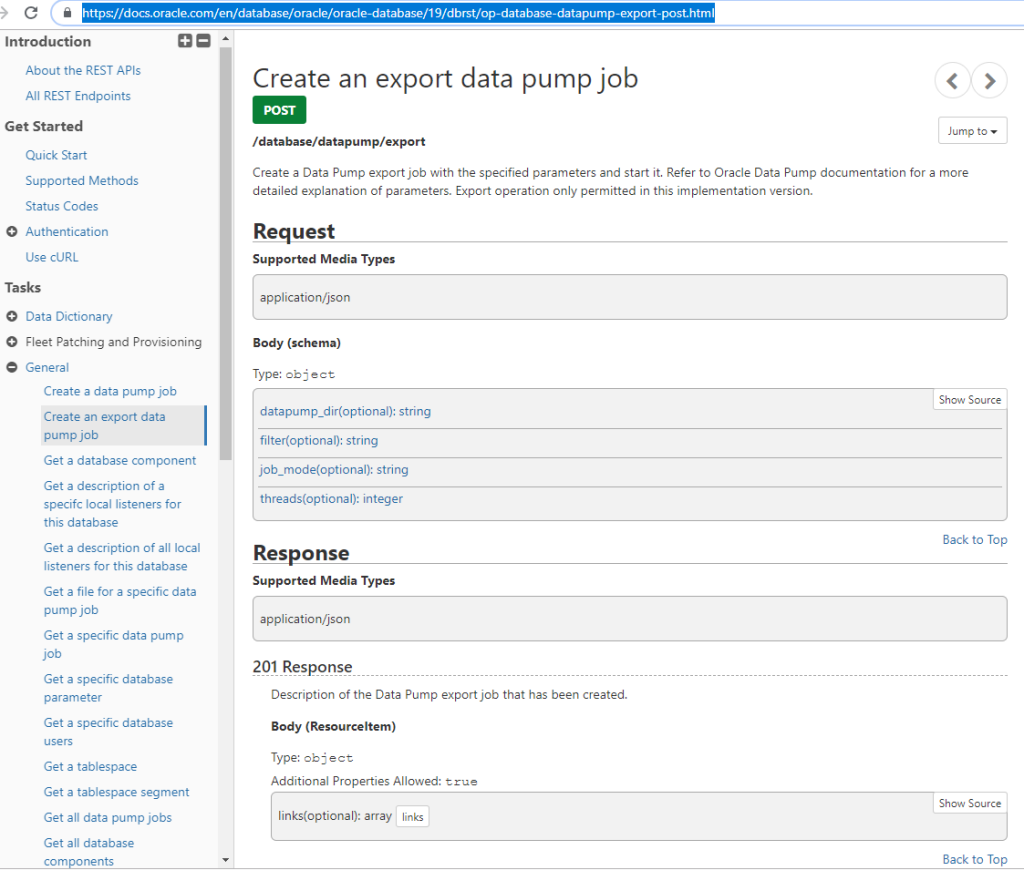

First things first, let’s find the doc for the Data Pump feature.

Found it!

Our POST to /database/datapump/export needs 4 JSON doc attributes. Where are we pumping the output to, what kind of export are we doing, what exactly are we exporting, and how many threads do we want to spin up for the job?

Now, if you know the Data Pump PL/SQL API, you’re aware that there are a TON more configuration widgets you can configure for creating Data Pump jobs. For a more complete Data Pump REST API, the Data Pump team will be working with us to deliver that later this insert vague time period here.

But for now, let’s show how easy it is to export my two tables.

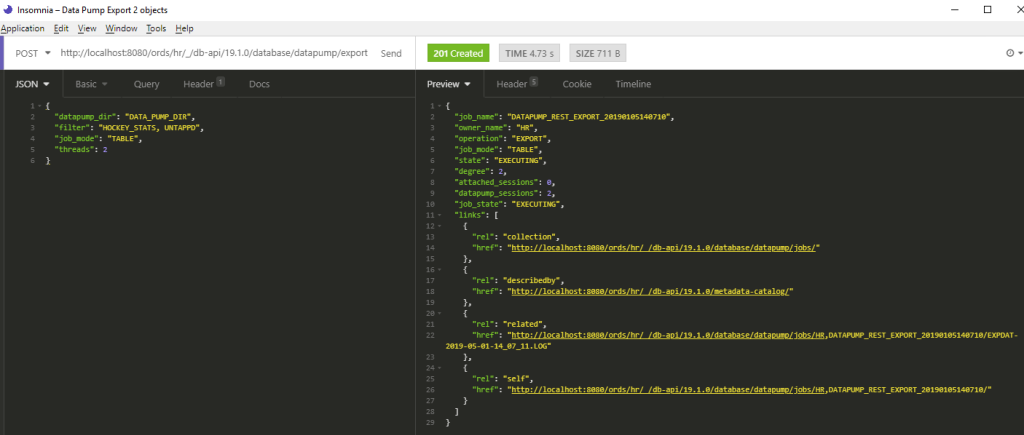

I’m going to POST to

http://localhost:8080/ords/hr/_/db-api/19.1.0/database/datapump/export

The HR in the URI tells you who will own the Export JOB.

Ok, so we know the verb and the address for the request. Now we need to construct the POST Body.

{

"datapump_dir": "DATA_PUMP_DIR",

"filter": "HOCKEY_STATS, UNTAPPD",

"job_mode": "TABLE",

"threads": 2

}The only tricksy part here is the “filter” items. We tried to simplify the request as much as possible. “job_mode” is set to “TABLE”, so for “filter” we need a comma delimited list of tables.

Let’s make the request!

The Job is created!

Below is the ‘raw’ response, but we’re told a few key things:

- the name of the job

- who owns it

- the type of export

- it’s state – at the time of the response it was ‘EXECUTING’

- how many sessions – per the thread count

- and a bunch of links

{

"job_name": "DATAPUMP_REST_EXPORT_20190105140710",

"owner_name": "HR",

"operation": "EXPORT",

"job_mode": "TABLE",

"state": "EXECUTING",

"degree": 2,

"attached_sessions": 0,

"datapump_sessions": 2,

"job_state": "EXECUTING",

"links": [

{

"rel": "collection",

"href": "http://localhost:8080/ords/hr/_/db-api/19.1.0/database/datapump/jobs/"

},

{

"rel": "describedby",

"href": "http://localhost:8080/ords/hr/_/db-api/19.1.0/metadata-catalog/"

},

{

"rel": "related",

"href": "http://localhost:8080/ords/hr/_/db-api/19.1.0/database/datapump/jobs/HR,DATAPUMP_REST_EXPORT_20190105140710/EXPDAT-2019-05-01-14_07_11.LOG"

},

{

"rel": "self",

"href": "http://localhost:8080/ords/hr/_/db-api/19.1.0/database/datapump/jobs/HR,DATAPUMP_REST_EXPORT_20190105140710/"

}

]

}The Log

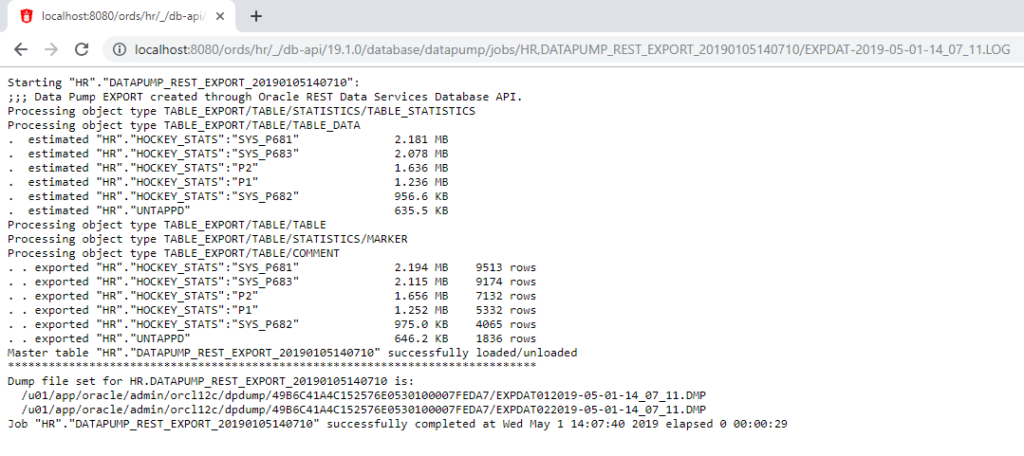

The first thing I check after kicking a job off is the log so I can see what’s happening. When I’m in SQL Developer, I go open the job and the tool shows it to me. But this is HTTP, so surely there’s a better way.

The response above had links for the log and job.

So let’s navigate to the Log:

Good news – my job finished, successfully! If you’re wondering why there’s more than 2 entries for the exported and rows, it’s because my HOCKEY_STATS table is partitioned.

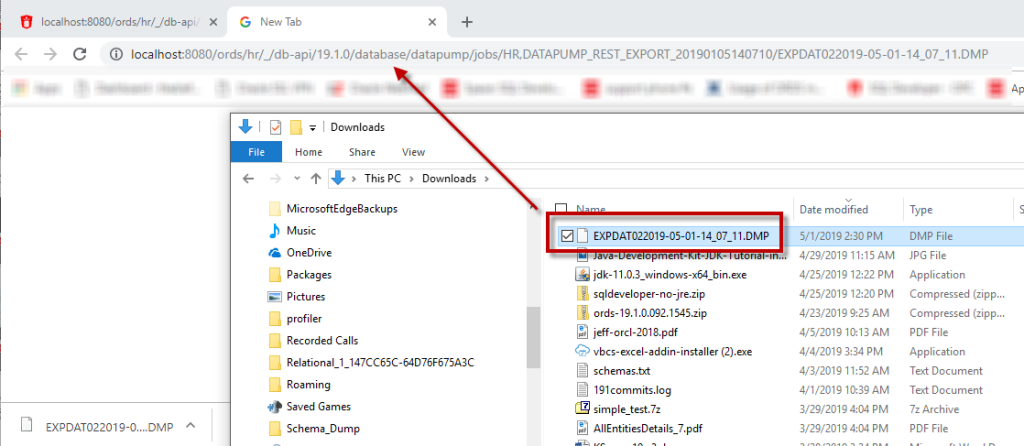

I want the DMP file too…

Just follow the links. If we open the JOB link itself, one of the associated links for that object is the output file, the DMP.

And if I click THAT link, ORDS will download it for me.

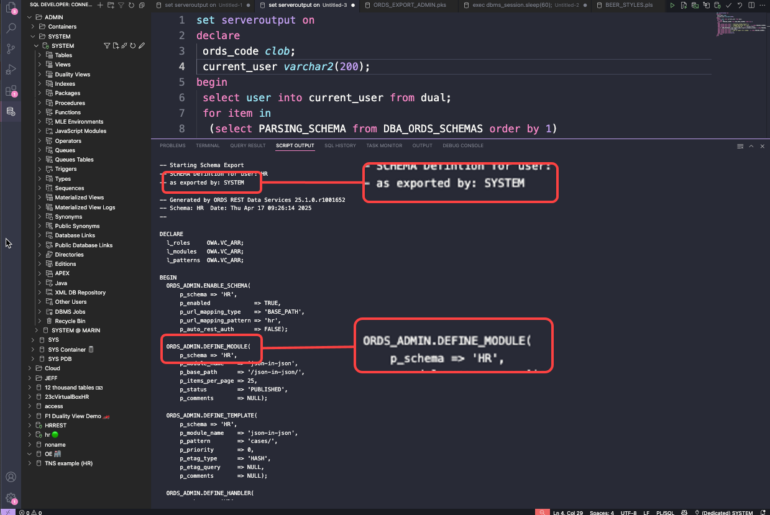

Jeff! I want some slides so I can show this off to my friends and family!

Ok, but only because you asked nicely.