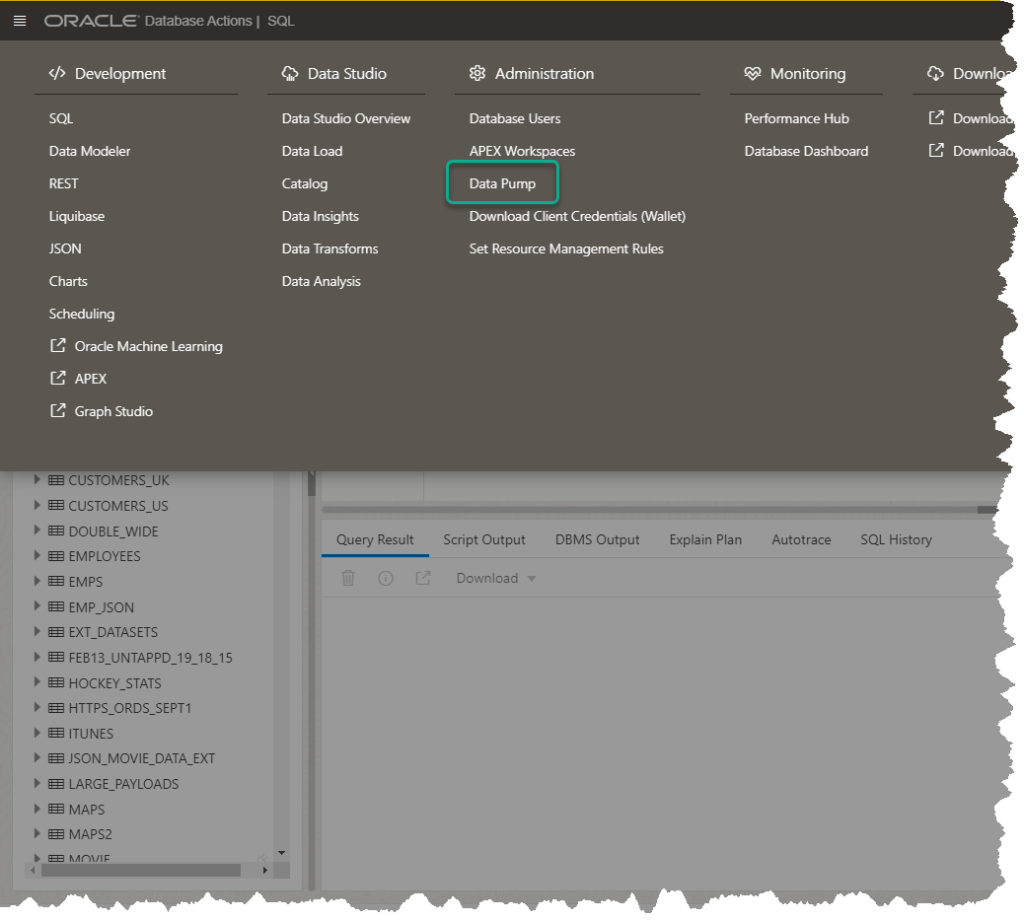

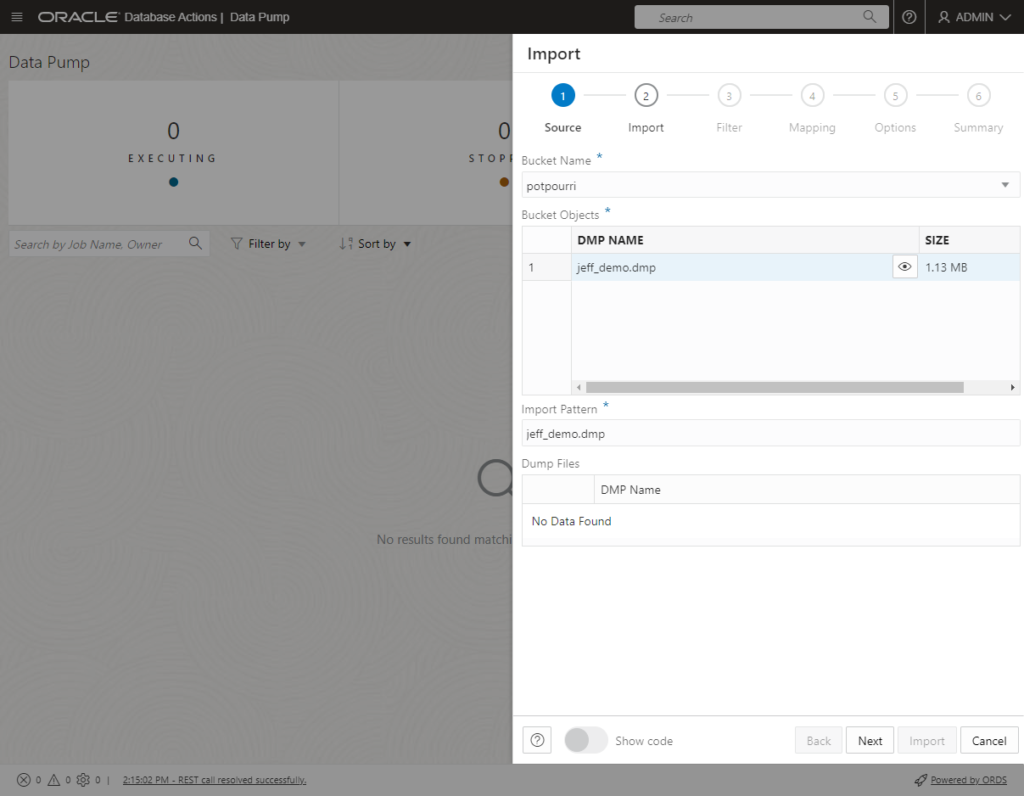

We now have an IMPORT Wizard that allows you to browse your OCI Object Store buckets for DMP files to be used for a Data Pump IMPORT Job.

Warning: this post has many, many pictures.

This takes you to a screen listing your previous jobs, where you can access both the logs and resulting DMP files. This feature has been around for awhile now, and I take you through it all here.

What’s new is this –

Don’t click that button, YET.

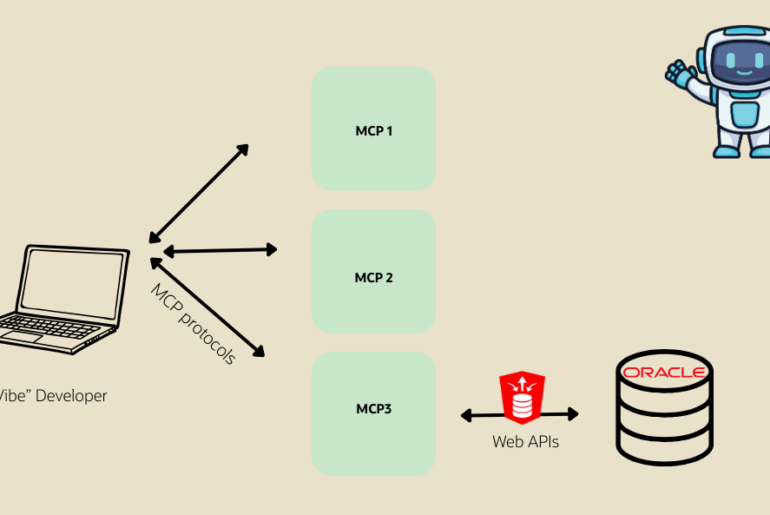

Let’s talk about how this works

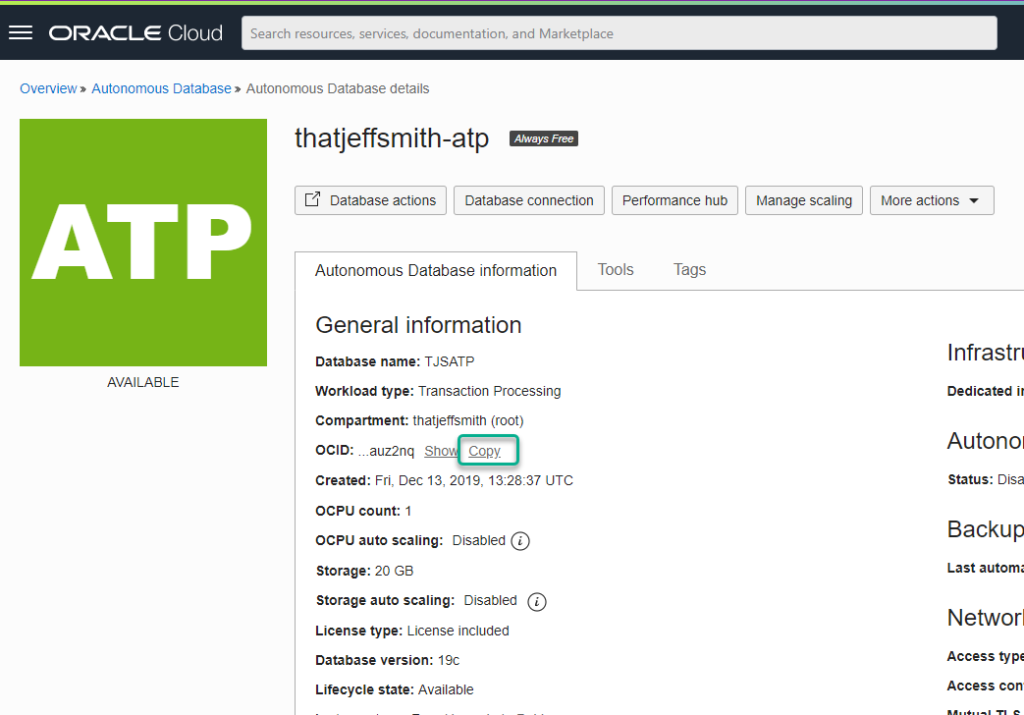

The universe we’re operating in is Oracle Cloud Infrastructure (OCI.) And in this cloud, we have a database, an Autonomous Database. These “resources” are assigned IDs, they’re called OCIDs.

I can take one or more of these resources, and assign them to a Dynamic Group. The power of these groups lies in the ability to grant their members super-powers via policies.

Policies define exactly what super powers our group members will inherit. But in the real, or cloud world, they really specify what things in our cloud tenancy can be accessed, and the actions that can be applied to said things.

Cut the abstractions already!

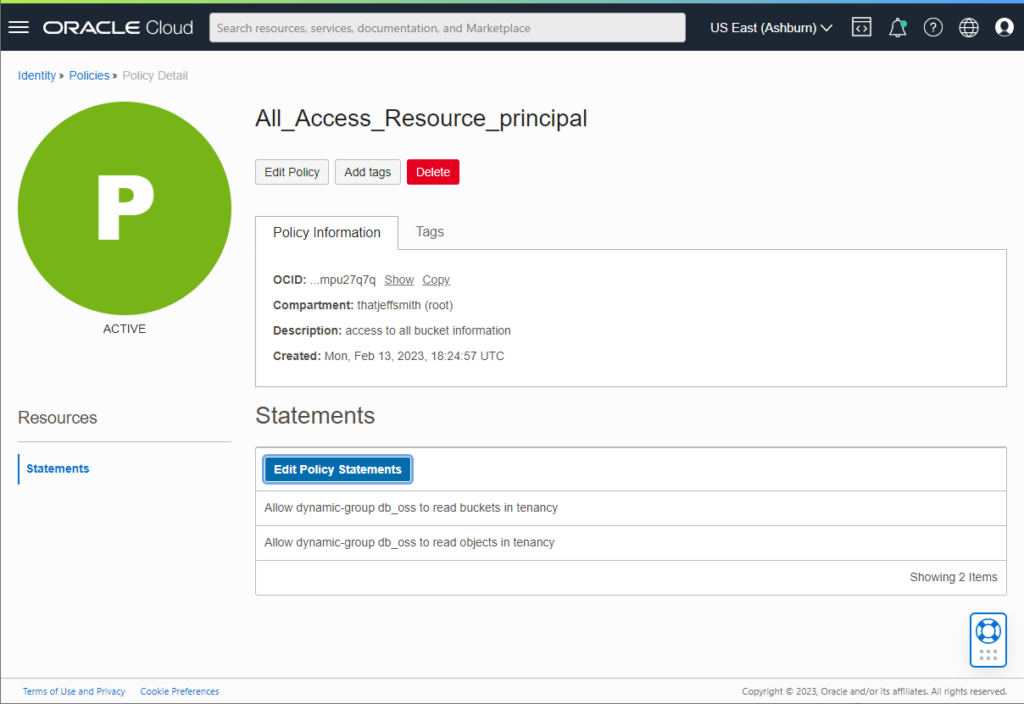

Ok, we’re going to tell OCI, that our Autonomous Database is allowed to browse and access objects (files) in the Oracle Object Store via these two policies:

- Allow group groupXYZ to read buckets in tenancy

- Allow group groupXYZ to read objects in tenancy

This ability for the database itself to authenticate and get access to OCI resources is what’s known as a resource principal.

SQL Developer Web will then have the Database (via DBMS_CLOUD PL/SQL API) itself browse your object store, grab a DMP file, read it to see what’s in it, and then create IMPORT jobs with it.

Savvy?

No? Well you can read the full docs here.

The Prerequisites, step by step.

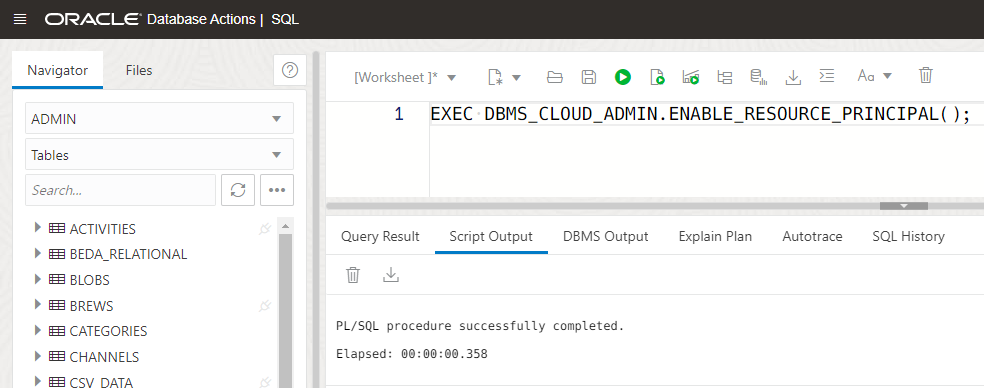

0. Enable resource principles for your database, must be done as ADMIN.

EXEC DBMS_CLOUD_ADMIN.ENABLE_RESOURCE_PRINCIPAL();

Having resource principles isn't enough, we have to setup the database such that it can take advantage of those. The nice thing is you can also quickly disable this at the database level if you want to lock things down from inside the database.

1. Find the OCID for your database, copy it

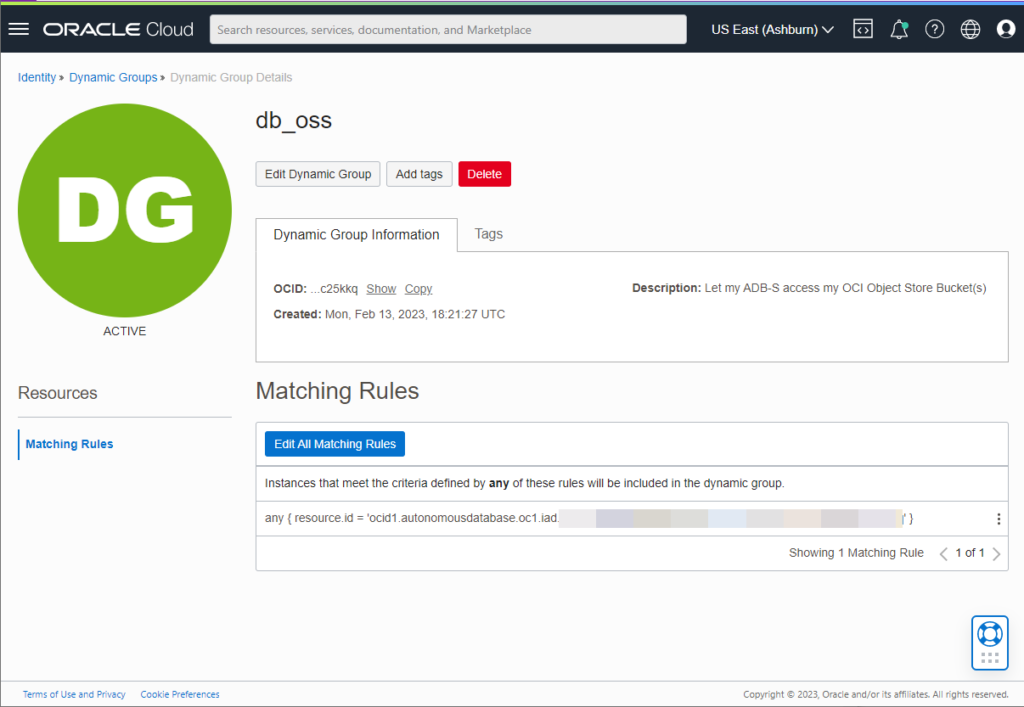

2. Create a dynamic group

You're going to add a rule, 'any resource.id = ', and splat in the OCID or OCIDs of your database.

3. Create a policy

Now that the dynamic group identifies our hero, we need to give them their powers.

If you just type 'bucket' into the search, it'll find these two items, make sure you add them both.

And that's it, the end of our 'homework.' If you ever decide you want to disable our feature about to be discussed, all you need to do is either remove the database from the dynamic group, or remove these policy statements from the policy. Or even, just delete the policy.

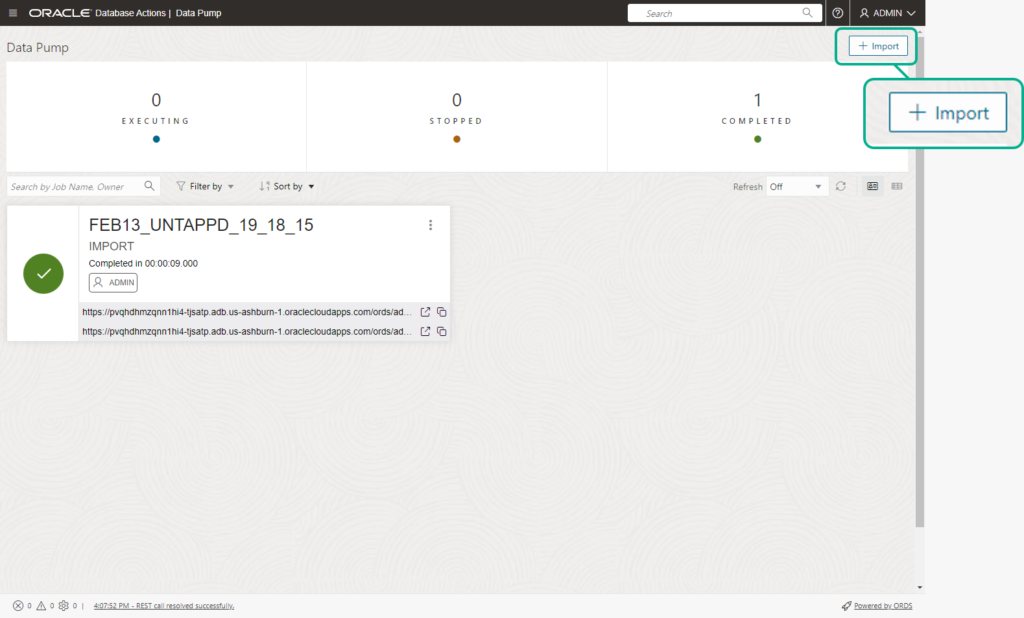

It's time to press the button!

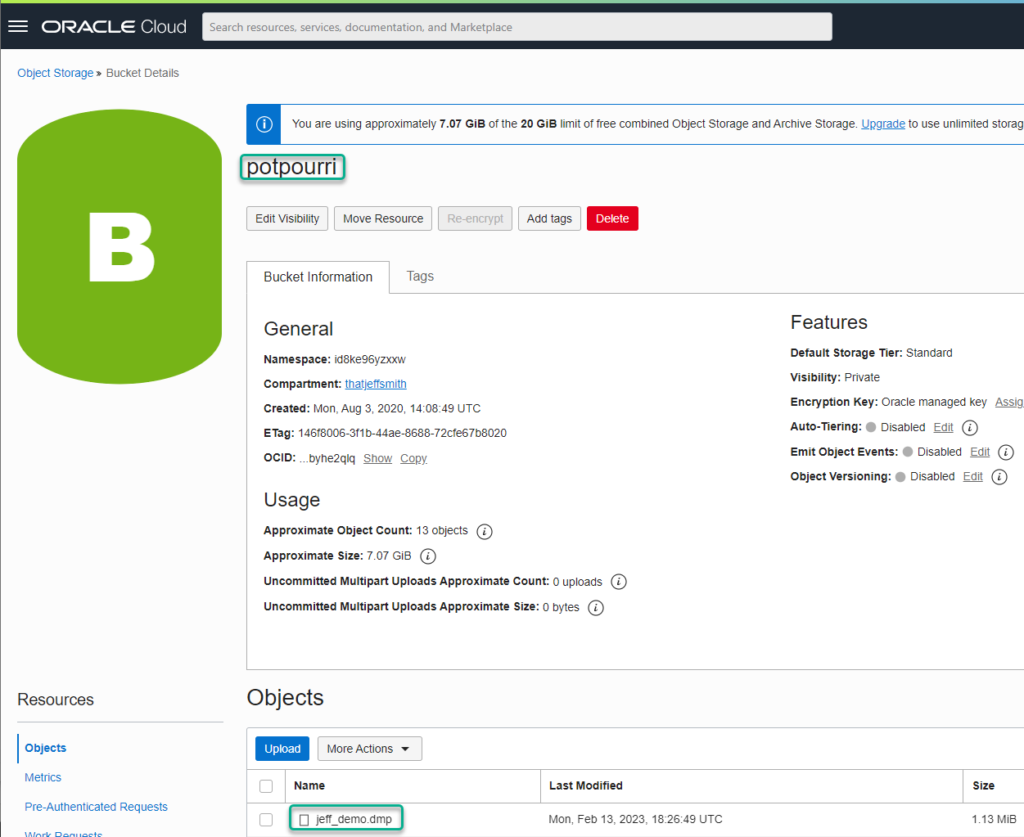

Except, I'm getting ahead of myself. Before we can do an IMPORT, we need a DMP file from a previous EXPORT. AND we need that DMP file put into an Object Store Bucket.

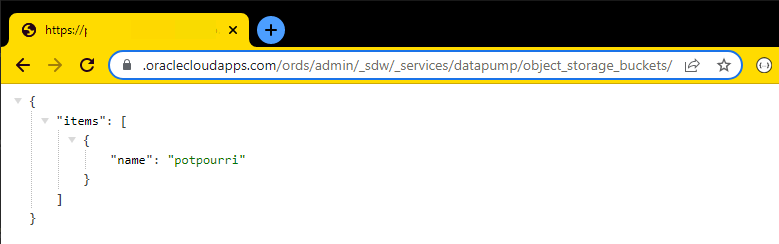

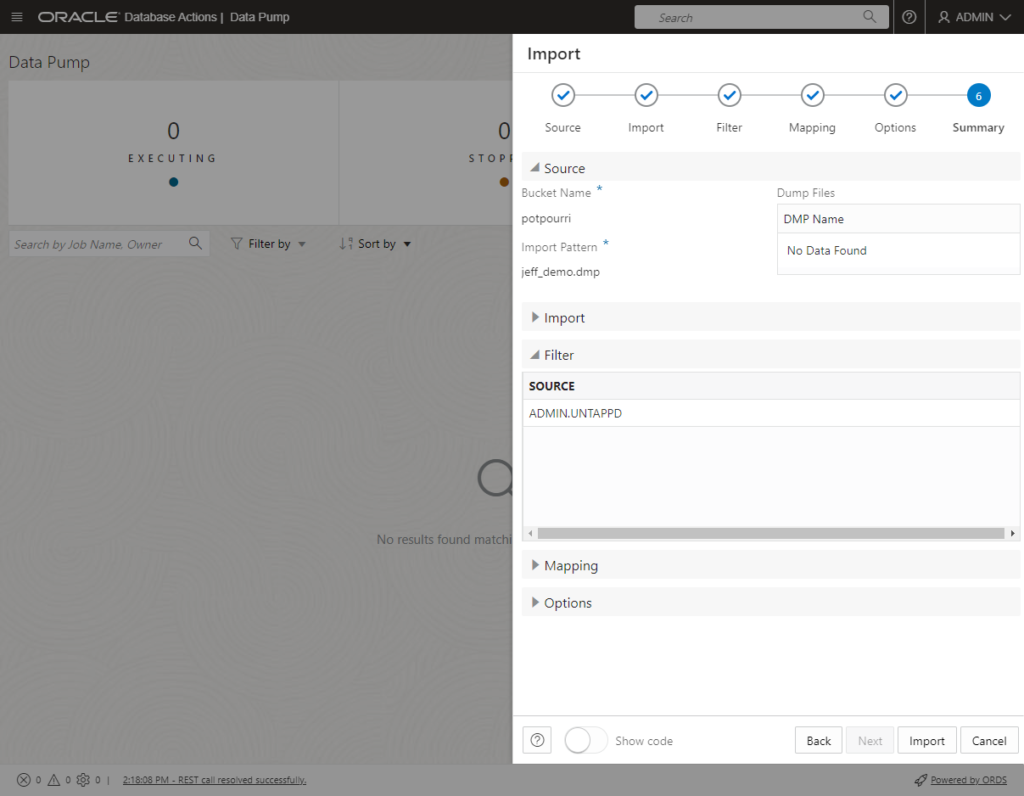

I'm going to be looking for 'jeff_demo.dmp' in a bucket named, 'potpourri.'

Now, now we can click the IMPORT button!

I only have one bucket, and I only have 1 'dmp' file to select. If I had more, I could navigate and select files as necessary.

If you do NOT see your bucket, it means our resource principal access from the database to the object store is having problems.

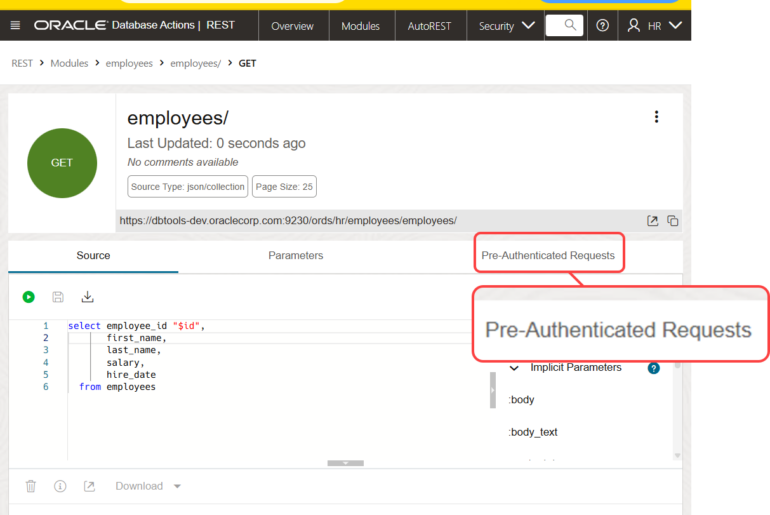

We're a web app, if you open your browser's dev tools, you can see the _sdw/ web call that initiates the requests for buckets.

I've heard from a user already there may be a problem if you have to navigate multiple levels of compartments to find your bucket, the SQL Developer wizard may not work. I'm chasing that bug down now, but the top of levels of buckets should be just fine.

Walking the wizard

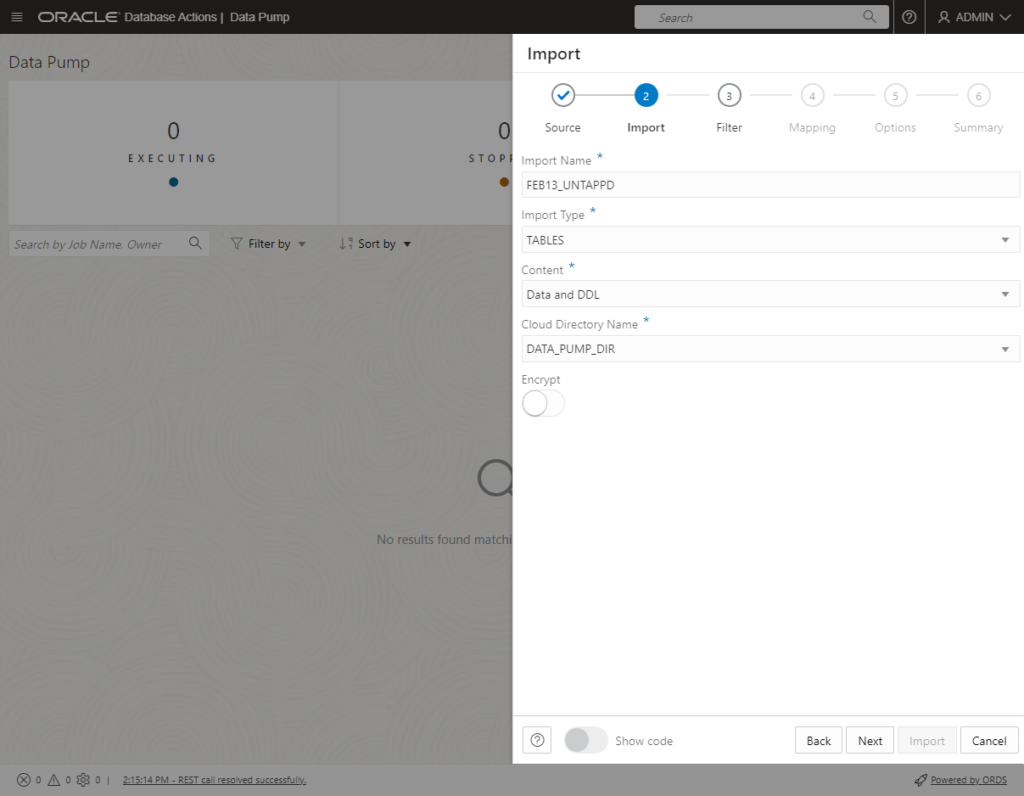

If you've used Data Pump before, especially in desktop SQL Developer, then our wizard will be pretty familiar. After you pick your file, we're going to ask you:

- what kind of import you want to run

- what schemas/objects you want to import

- if you want the data, the ddl, or both imported

- if the DMP file is encrypted, what's the encryption key

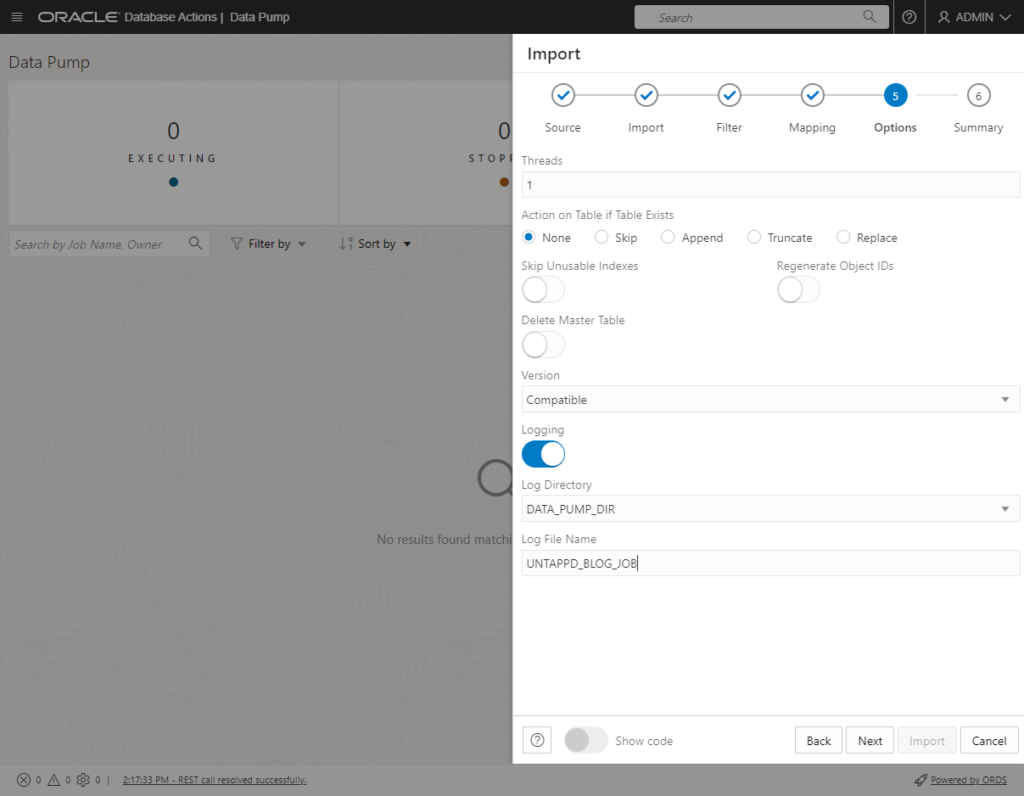

- how you want the job/log named

- remapping for both schemas and tablespaces

- review the summary screen and click 'Import'

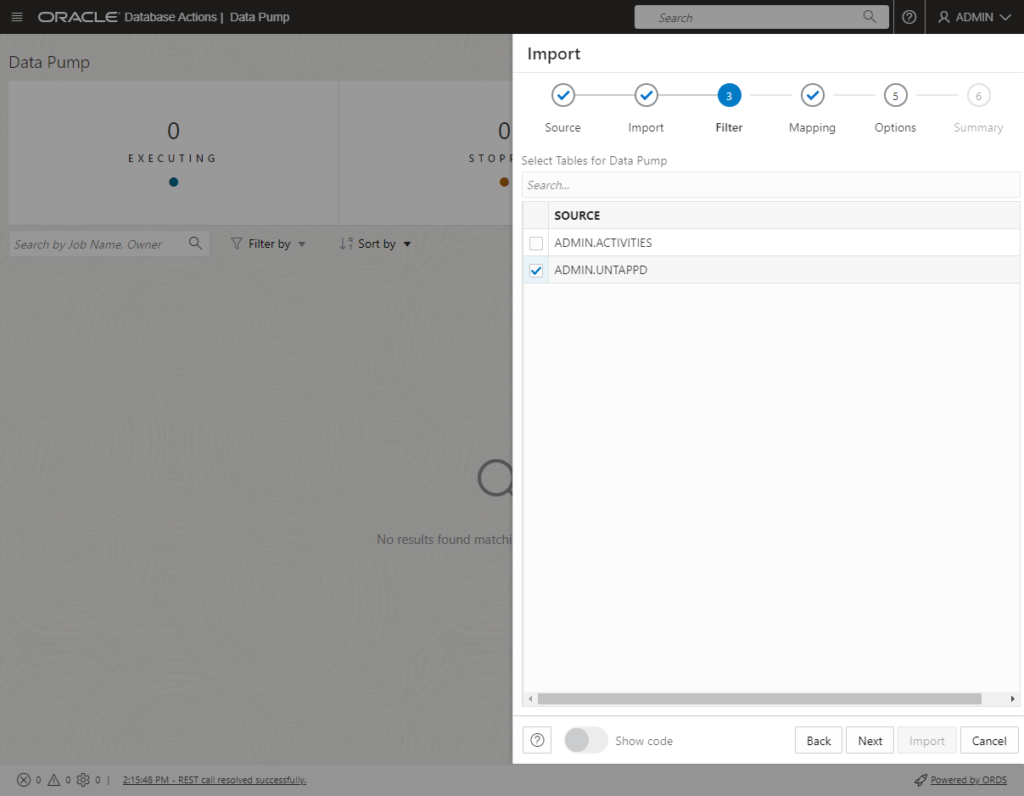

We need to do this so we can do data pump operations on it to get a list of schemas, tables in there that you can select from in the following screens.

We're copying the file and running a dummy job against it before we can populate this screen, remember?

See the Data Pump docs if you have questions.

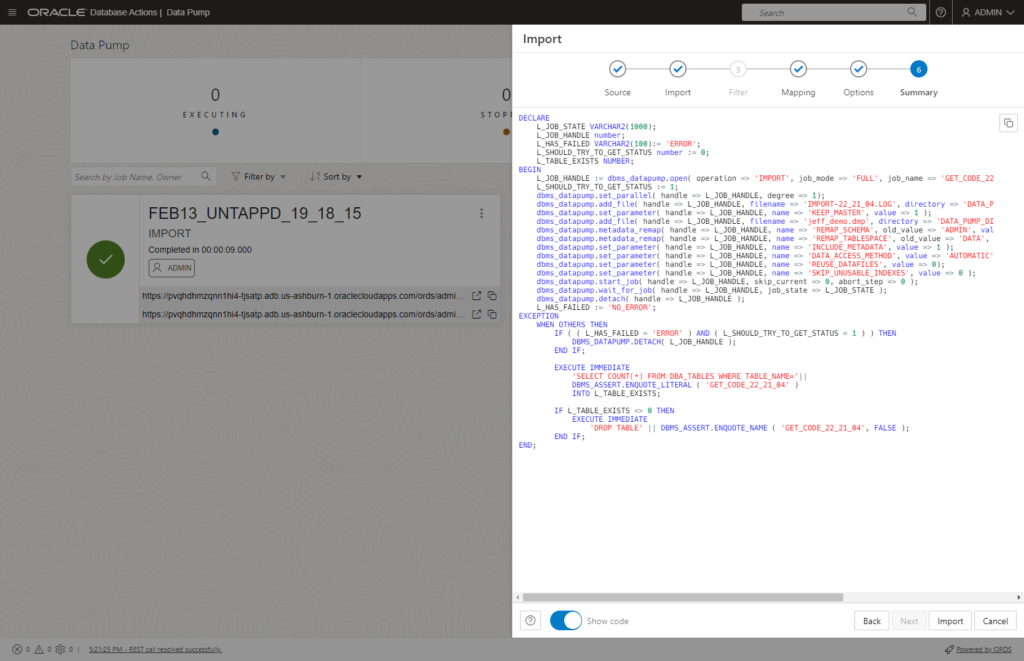

Or don't. Maybe you just want 'the code.'

See the 'Show code' toggle at the bottom? I can take the PL/SQL block, and tweak it, any which way I want, and run it in a SQL worksheet. I've been doing this for months, years to both export and import from my database. Remember, these Wizards just make things easier, they're not really magic.

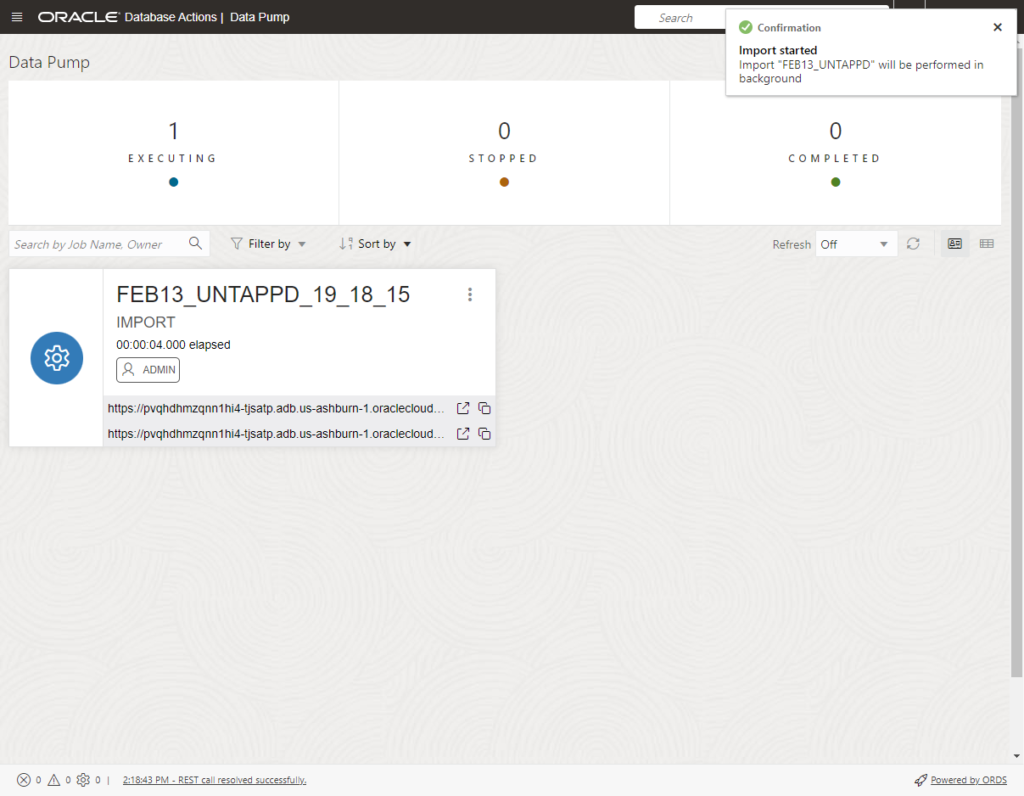

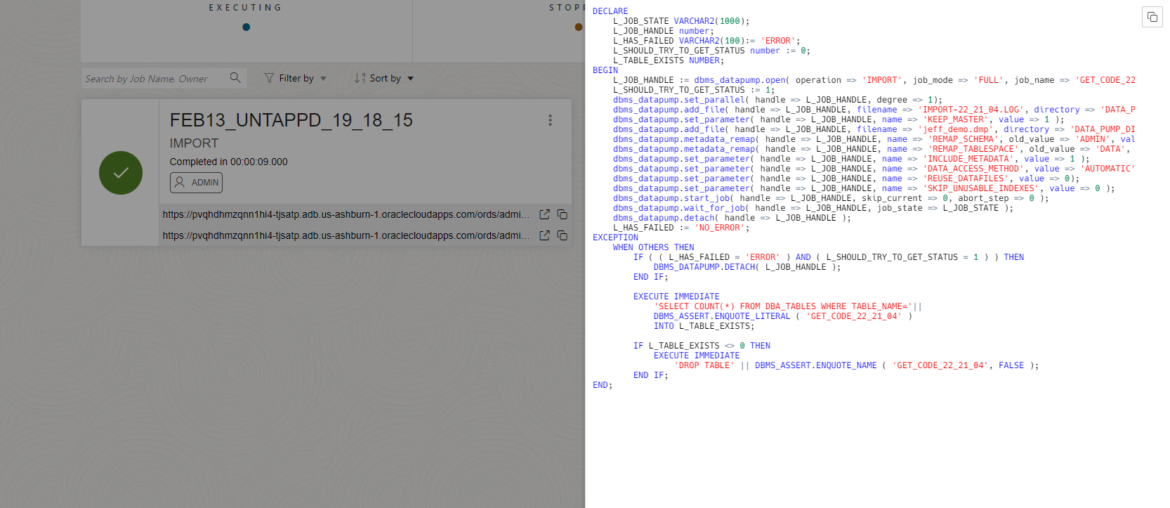

Ok, I clicked the 'Import' button

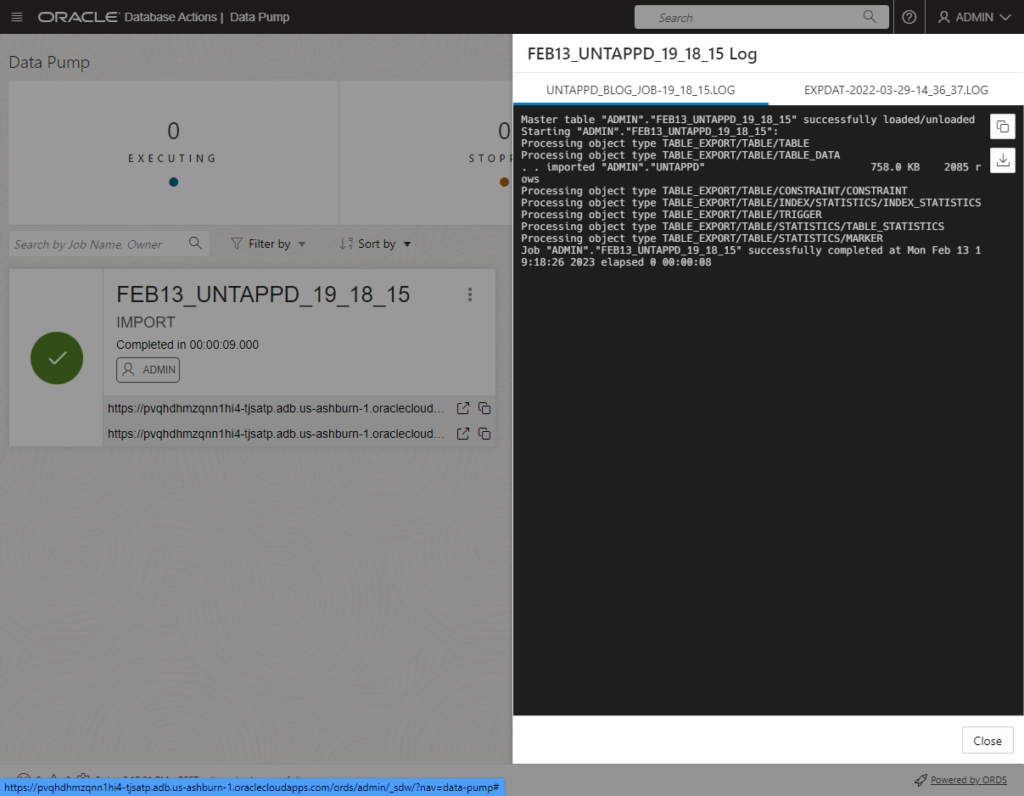

I can turn on the Refresh, and wait for the blue gear to turn into a green checkmark, OR I can open the job log panel and actively watch the log get populated. But my job ran pretty quick, so I'm basically just reading what already happened.

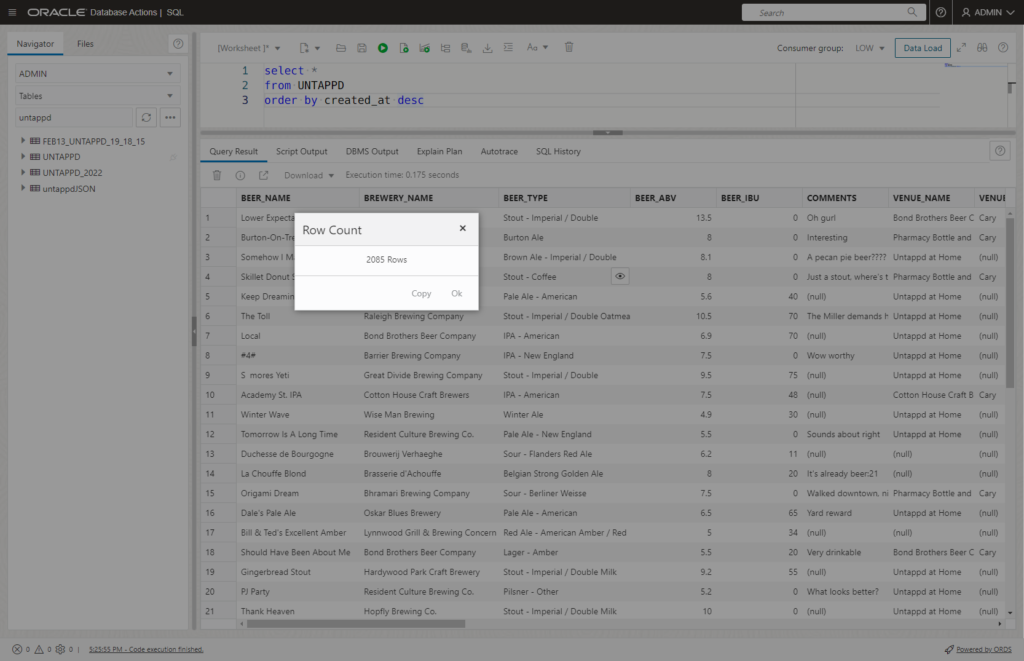

2,805 rows were processed for my UNTAPPD table. Let's go take a look!

Is that it?

Mostly. Things to discuss further:

- what about exports? - We're building that Wizard, next.

- what about on-premises? - Our wizard already supports reading files from your database directories, I'll show that if enough people ask

6 Comments

Sadly this fails at the import botton phase for me as you mention there may be a bug.

Does this have a ticket so we can see when it is fixed please?

I jave checked and the resource principal access from the database is all set as it should be. In fact, I’ve given it an extra one

The only known issue is the one I mentioned – does that capture your use case, the multiple levels of nested compartments?

Hi Jeff,

What are the pre-requisites of importing via Database Actions DataPump ? In OCI we execute EXEC DBMS_CLOUD_ADMIN.ENABLE_RESOURCE_PRINCIPAL(); but in on-prems while selecting the directory and then specifying the filename it says “Error while importing the data File does not exist in the directory” whereas File exist. Th rest enabled schema has read/write on this directory and the file permissions are correct.

Regards

Nitish

For on-premises, simply have the DMP file in the database directory, and it should be fine. I just tried it and should be working as designed.

Hi Jeff,

Thanks for this feature.

How to take an export Data Pump from Oracle Autonomous Database (always free)?

Best regards,

Saeed

See today’s post – thanks for the question!