This feature has been around, for a LONG time:

I have a local 19c database, running on my Mac via VirtualBox appliance, with a decent amount of data in it, that I’d like to move up to my Oracle Cloud instance.

Let me clarify a few things:

- decent amount of data = more than just base HR samples, and interesting data at that, but not hundreds of millions of rows

- Oracle Cloud = Oracle Autonomous Data Warehouse Service, running out of Ashburn data center in OCI

Could I use this ‘old’ wizard to move up my data?

Sure.

It will be easy. Just follow the wizard.

It will be good. It will grab all of my data, and help me if some stuff doesn’t make it through.

It will be..within acceptable limits of slow. The copy tool will do row-by-row inserts over JDBC, going across the Internet.

If I want FAST, then we need to look at using the Cart or Data Pump. Those steps are also GOOD, but aren’t so EASY. Data Pump requires Server Side access on the source, and both require knowledge, experience with using Oracle Storage Service and their APIs.

When I say it’s not ‘easy’ – I’m coming from the perspective of experience. Of course, if if you’ve done it before, it is pretty easy.

But, if this is your first rodeo, then Database Copy is going to be easiest path to walk.

The data, just how much are we talking?

Across my 19 tables, I’ll be moving up about 3.7M rows. Again, not a lot, but a decent amount for doing blogs, testing, and playing around.

Let’s move up the tables and data!

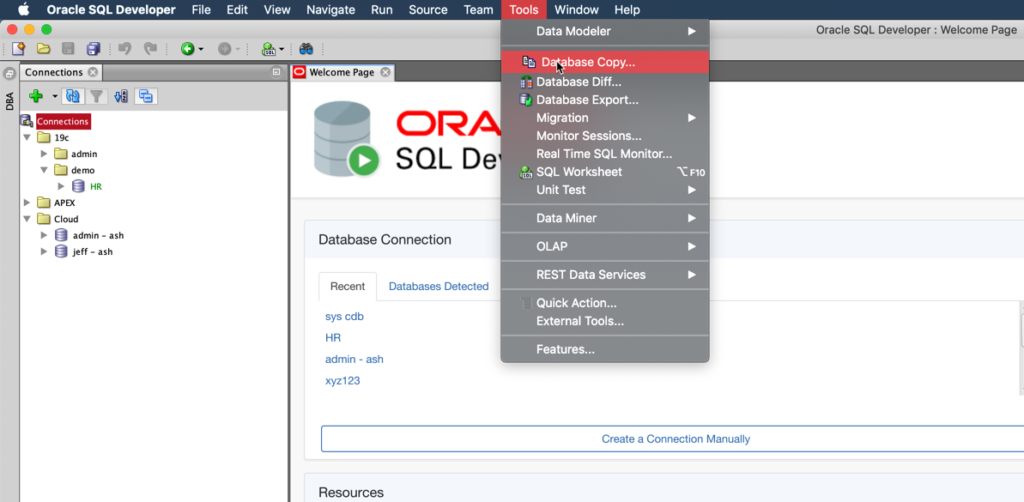

I’m going to use the Wizard, under the Tools menu, and I already have a schema (JEFF) created on ADW, and connections for both my target and source databases.

I’ve said this before, and I’ll say it again – don’t use the DEFAULT ADMIN account in Autonomous for your application work. Create a new USER, and use THAT.

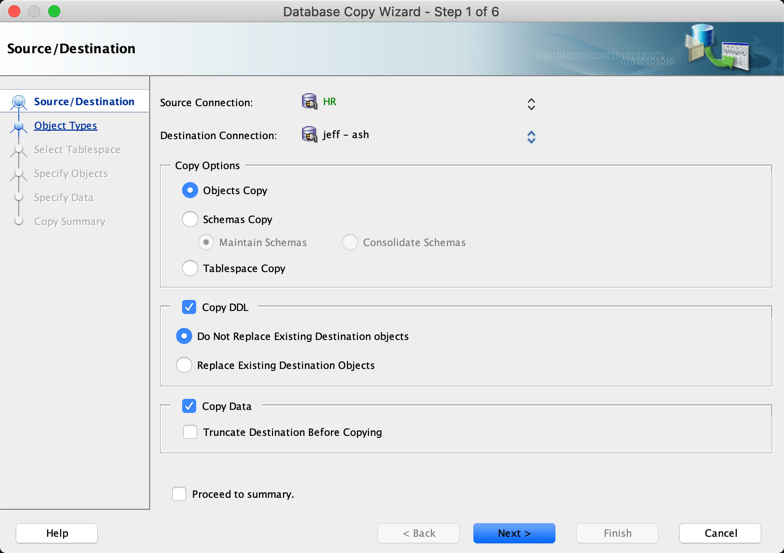

Anyways, here’s Step 1 of 6 in the Database Copy Wizard:

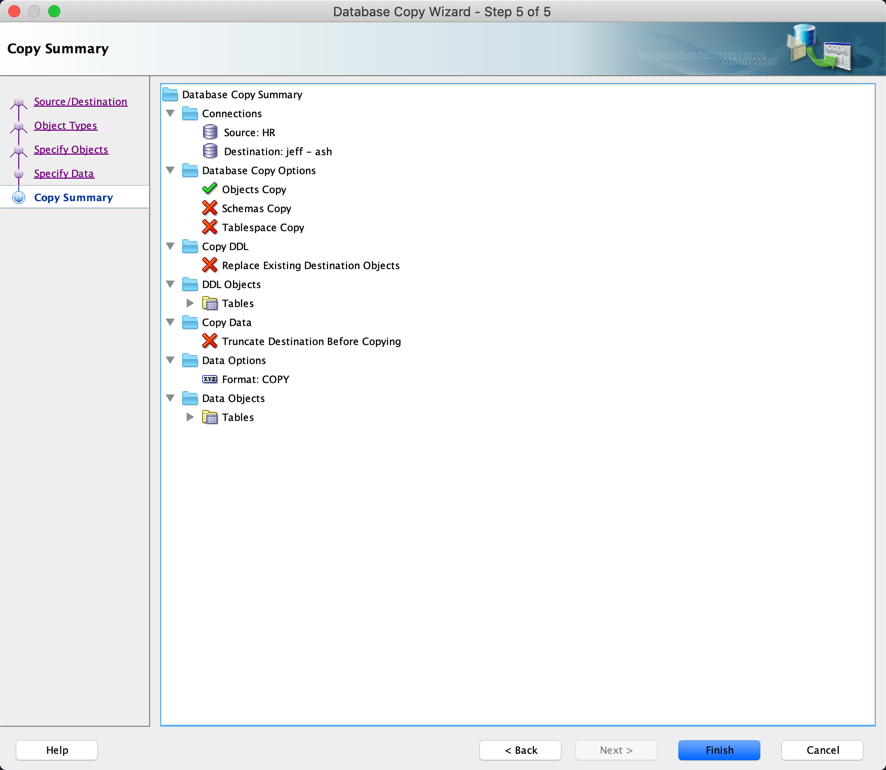

So I’m saying, these objects don’t exist yet, please create them for me. And when you’re done doing that, please copy the data as well.

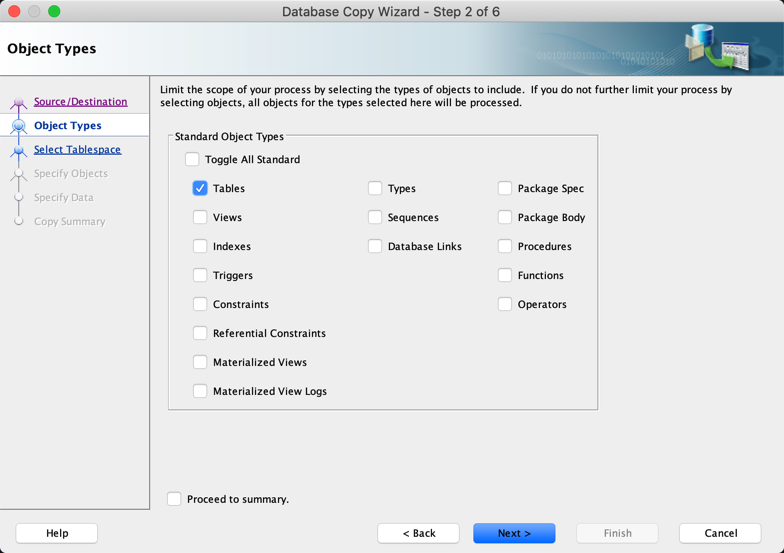

By default we grab everything, but I just want the TABLEs. And at that, I only want SOME of the tables.

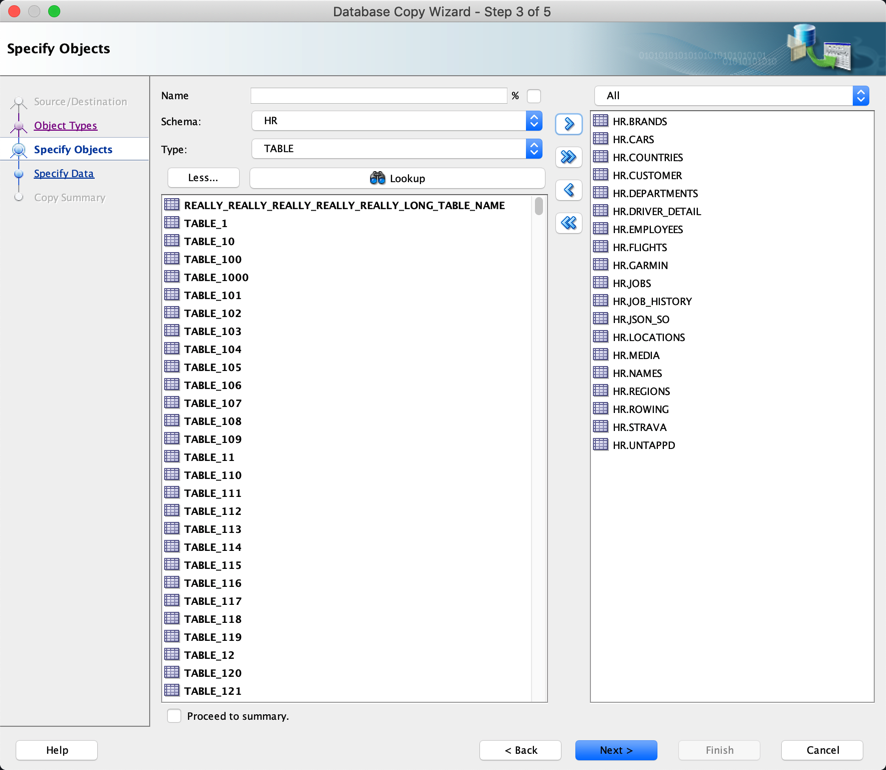

Just drag over the tables you want to copy. You can move around schemas, but they’re going to get created IN the target schema, that is, the USER that is defined in the Target connection.

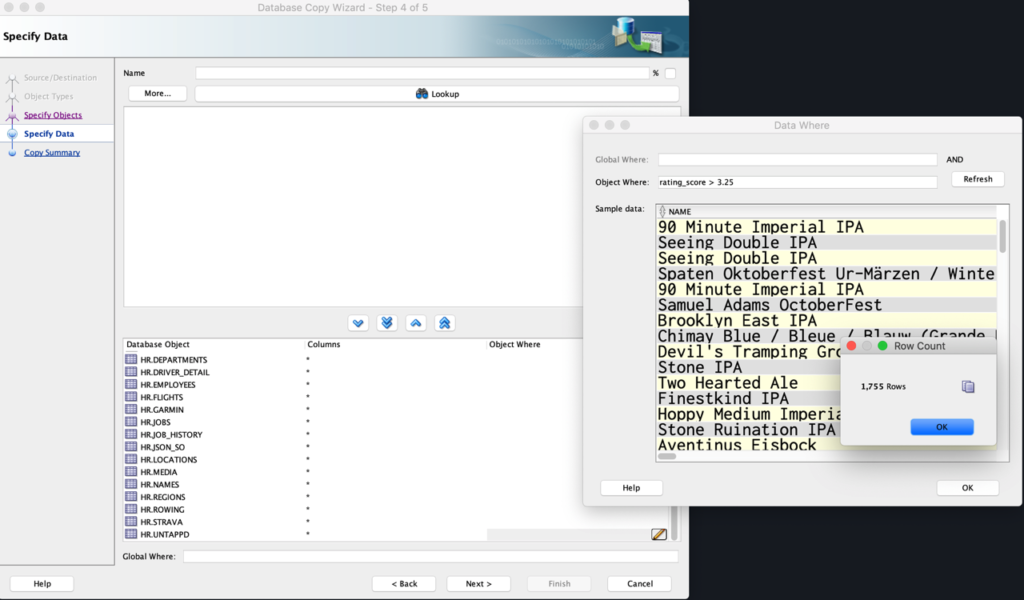

Don’t want all the data?

I only want my ‘good’ beers to come over. If I had an application ID, common across all tables, I could use a GLOBAL WHERE clause to just pull a subset of records from all the tables.

Time to review our settings…

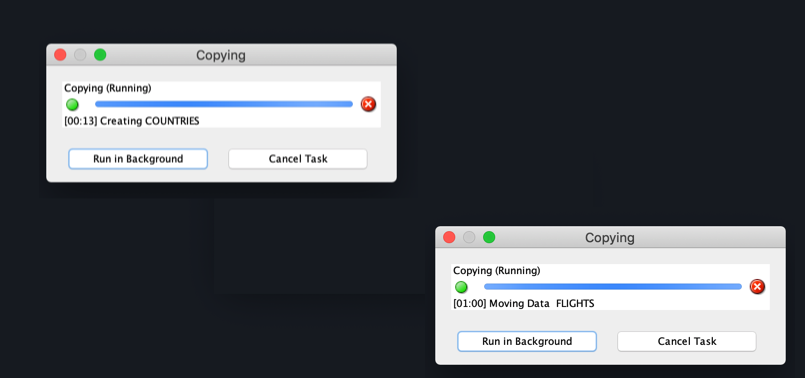

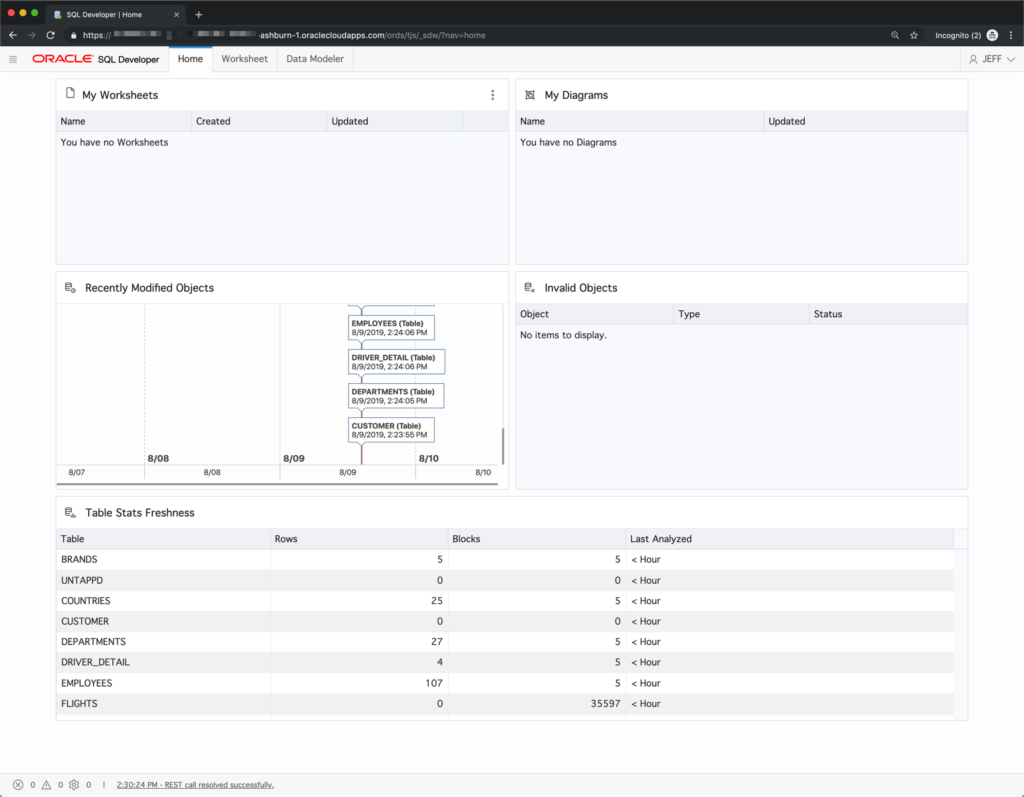

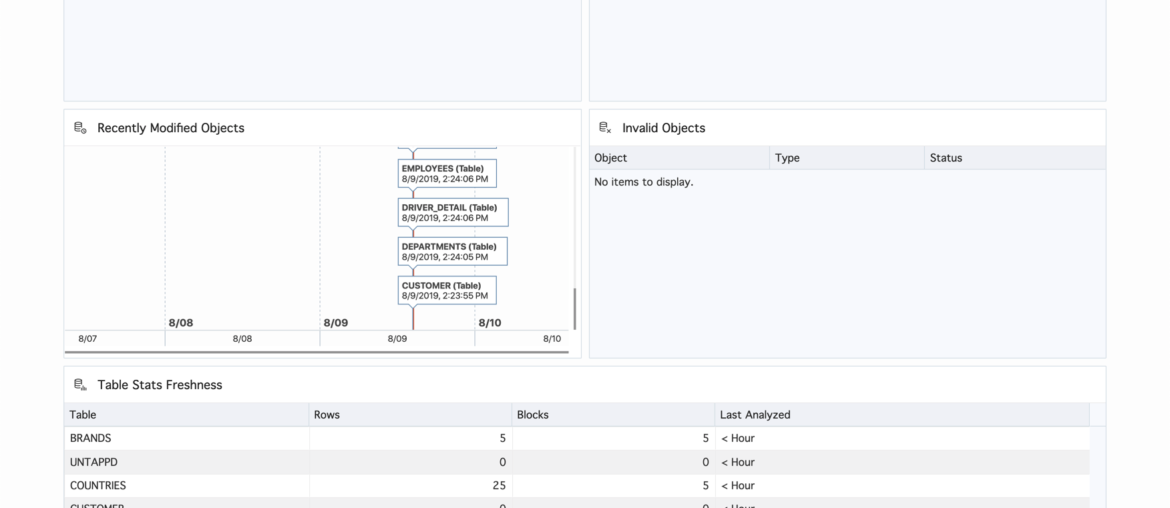

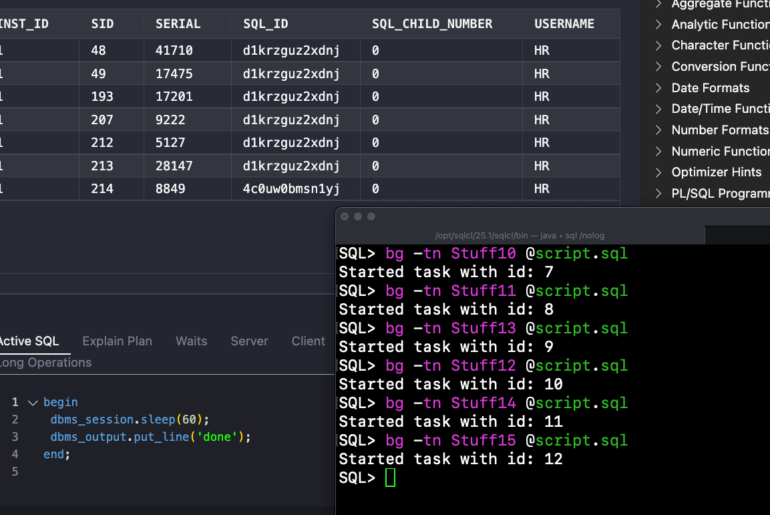

As it’s running, I can use the SQL Developer Web dashboard to see what’s happening…the Autonomous Database automatically collects stats for my objects as they’re being created.

And about 10 minutes later, the session will finish.

SQL Developer on my desktop gives me a summary of the operation, or I can view the detailed log.

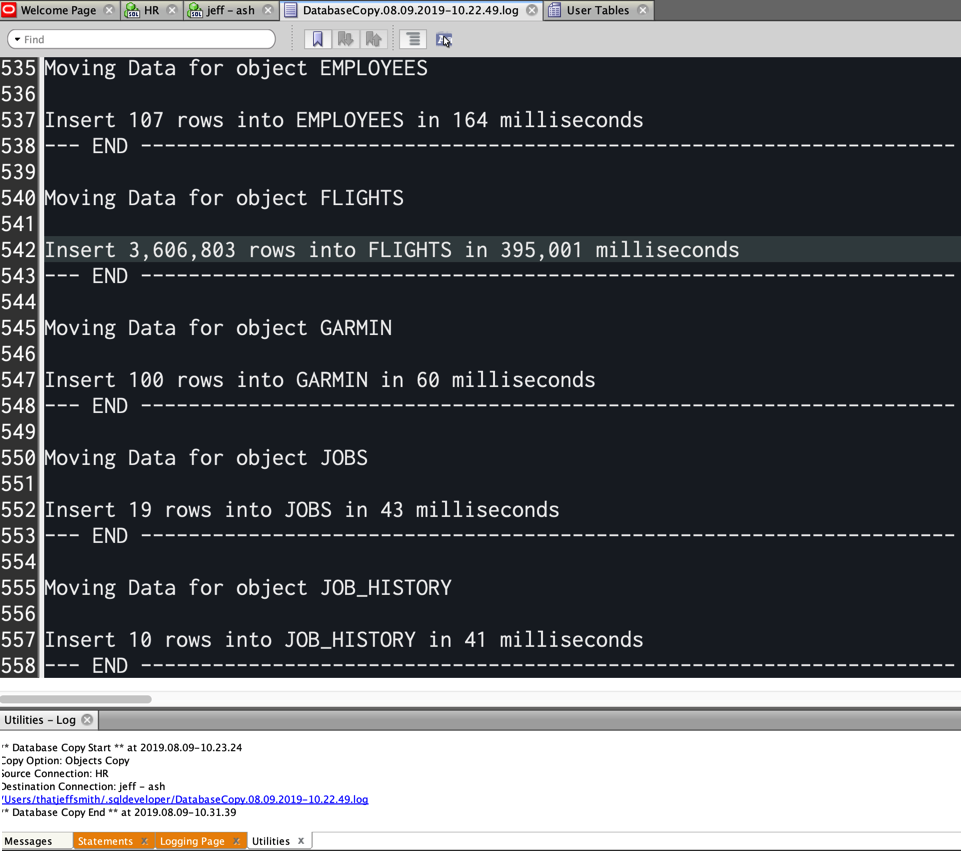

So it took 8 minutes and 15 seconds to move up the 3.7M rows. And you can see, by far the largest table, FLIGHTS took the bulk of that time.

FLIGHTS took 6.5 minutes to move up 3.6M rows, or about 16,000 rows/second? My math is horrible, but I’m sure Connor will step by and correct me if necessary.

So, it is INDEED easy, but not really FAST when we’re thinking in terms of what Data Pump or SQL Loader/External Tables could handle. The nice thing is, you get to choose which way to go when you’re ready to do the task at hand.

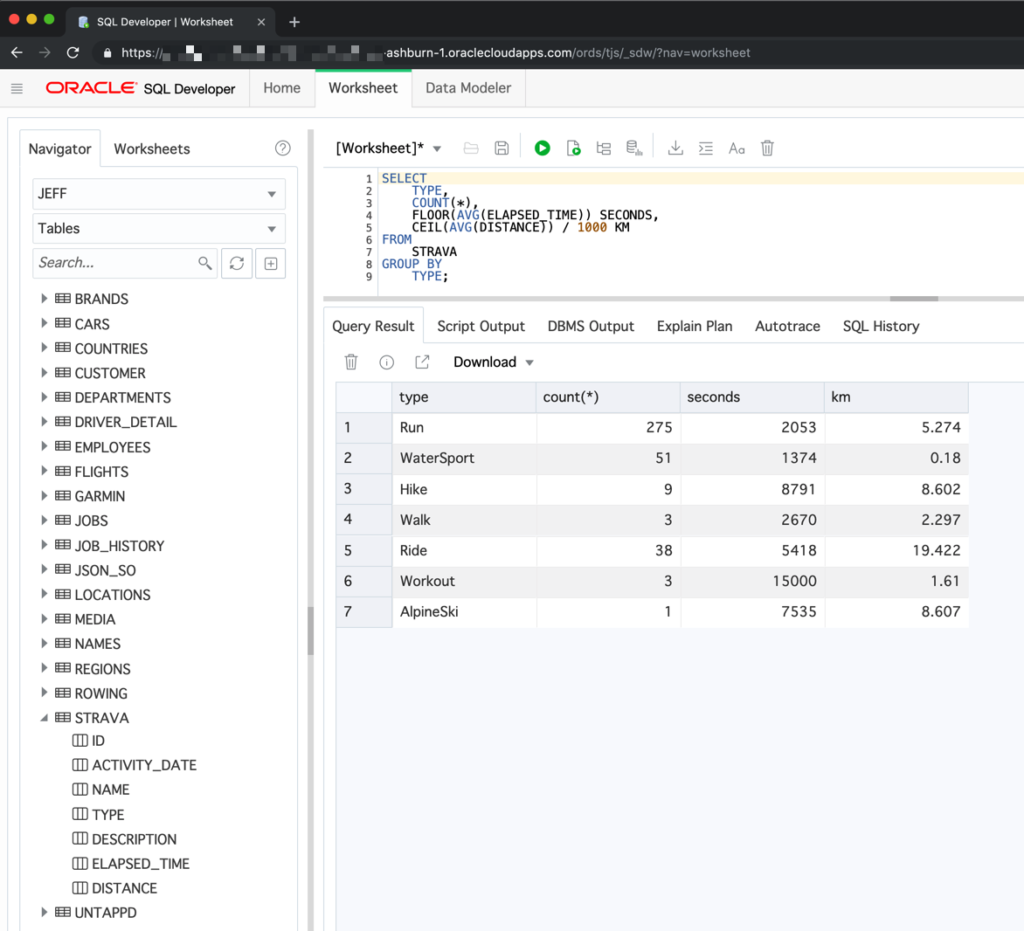

Oh, and of course I can now use SQL Developer Web to play with my data in ADW

2 Comments

Jeff

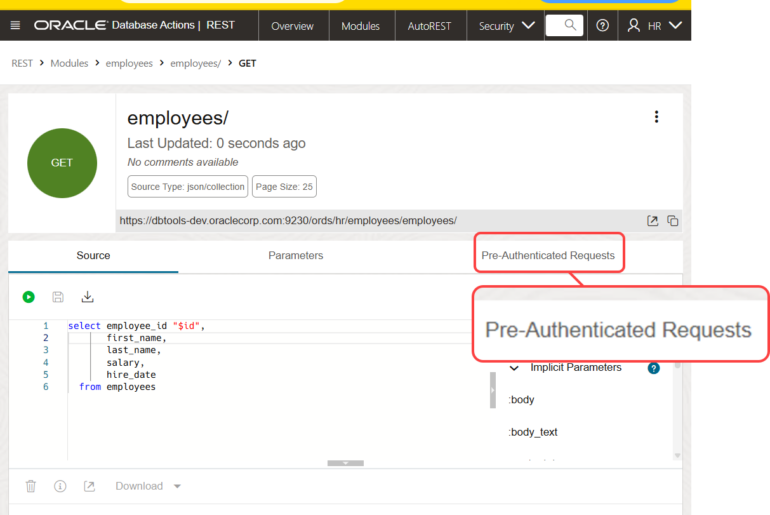

It appears database copy doesnt include ORDS schema objects?

At least last time I did this it wasn’t included.

I understand why it is that way now and also know how to export/re-import ords modules however but it would be nice to be able to do this with 1 click of a button on the database copy wizard step 2 of 6

standard object types form

They’re technically not objects belonging to that user. They’re items in the ORDS_METADATA repository, which are assigned to your schema.

Updating the Database Copy/Export to include RESTful Services and REST Enabled objects is a good idea, I’ll log that today.