Ok that title has a TON of buzz and marketing words in it.

But, we have the Oracle Cloud. And available there is a service where we take care of your database for you – Autonomous. We make sure it’s up, and that it’s fast.

We have 1 autonomous cloud service today and will have and second one coming SOON.

These services come with an S3 compatible Object Store OSS (Oracle Object Storage, complete with S3 API support) , so you can move data/files around.

For the new Autonomous Transaction Processing (ATP) Service, this feature in SQL Developer will be available in version 18.3 of SQL Developer

In SQL Developer version 18.1 and higher, we make it pretty easy to take data files you may have uploaded to your OSS and load that data to new or existing tables in your Autonomous DB Service.

We basically make the files available to the database, map the columns just right, and then make calls to DBMS_CLOUD (Docs) for getting the data in there. DBMS_CLOUD is an Oracle Cloud ONLY package that makes it easy to load and copy data from OSS.

We also make it very easy to TEST your scenarios – find bad records, fix them, and run again if necessary.

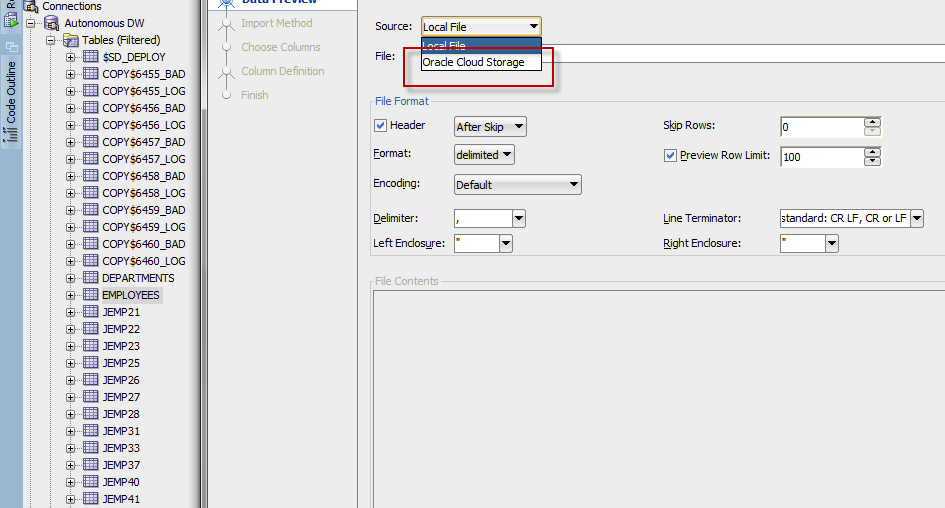

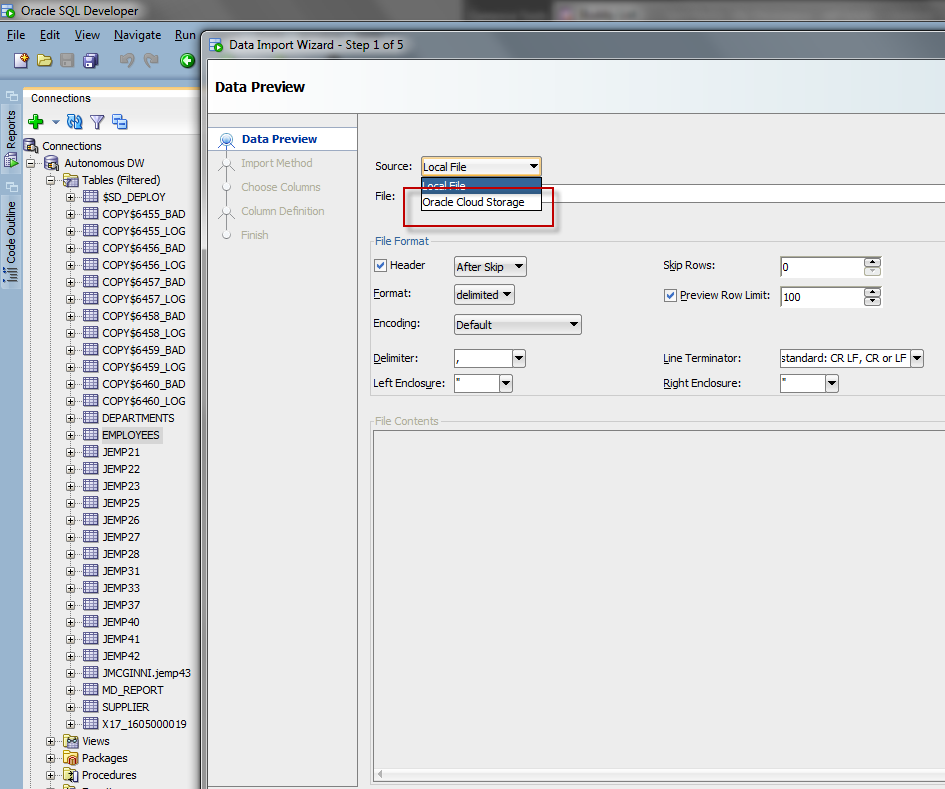

This is all done with a very familiar interface, the Import Data dialog.

If your existing database connection is of type ‘Cloud PDB’, then when you get to the first page, you’ll see this available in the source data drop down.

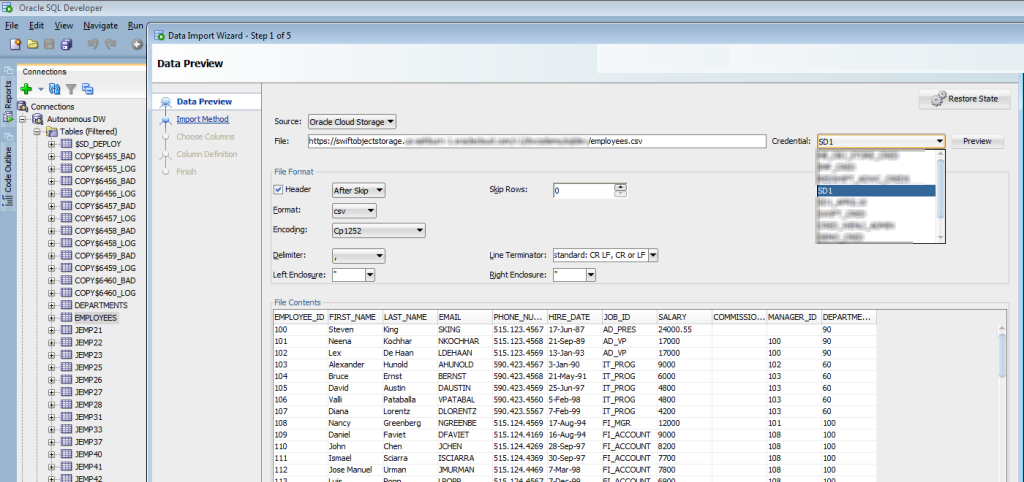

Then you need to select your proper credentials and tell us which file you want (we do NOT have a OSS file browser TODAY, but we do want one.) So you need to have the URL handy.

Paste it in, and hit the preview button.

This will work for NEW or Existing tables. For this scenario I’m going with an Existing table.

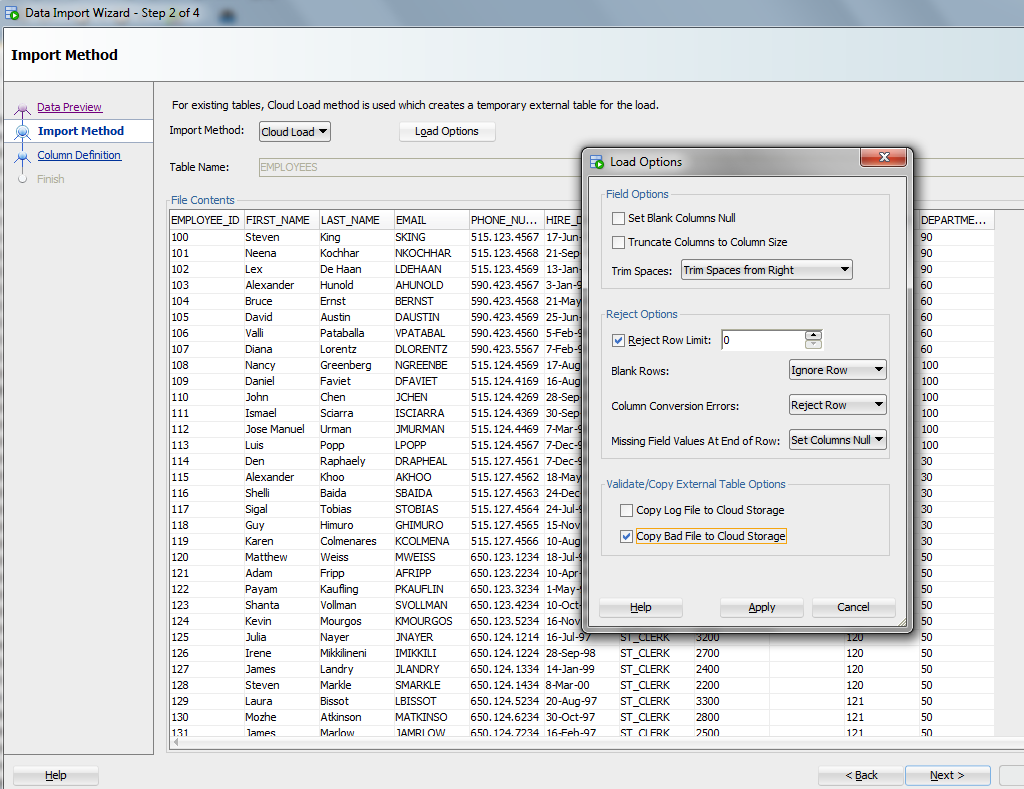

The next page is almost the same as you’re used to, but a few important differences:

Once you have your options in a happy place, the rest of the wizard is pretty much the same..until you get to the Test dialog.

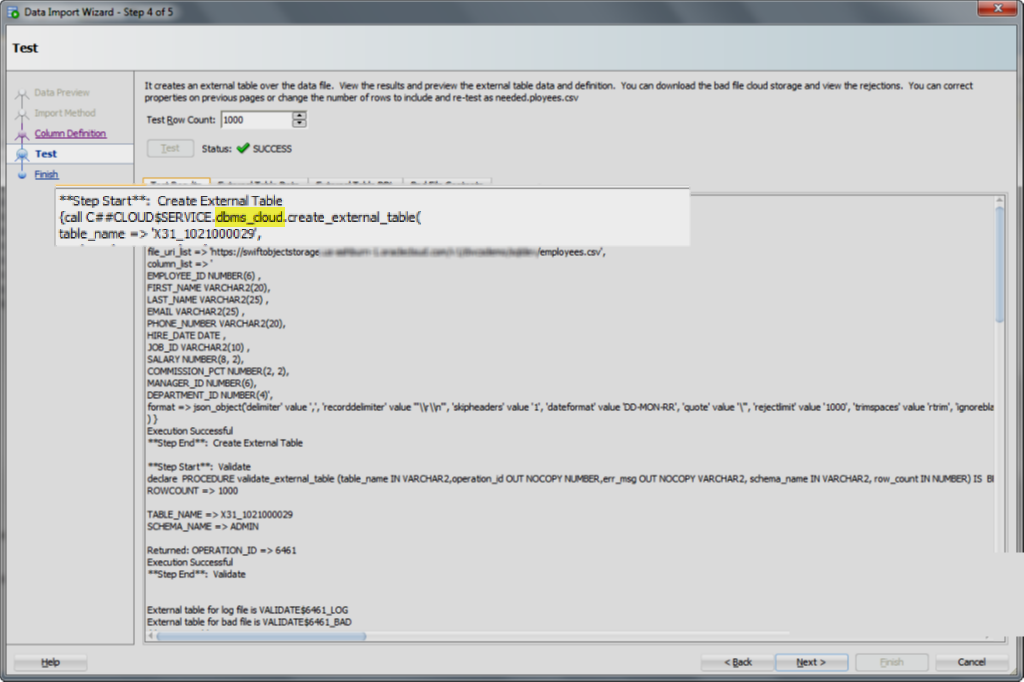

This is where it gets FUN

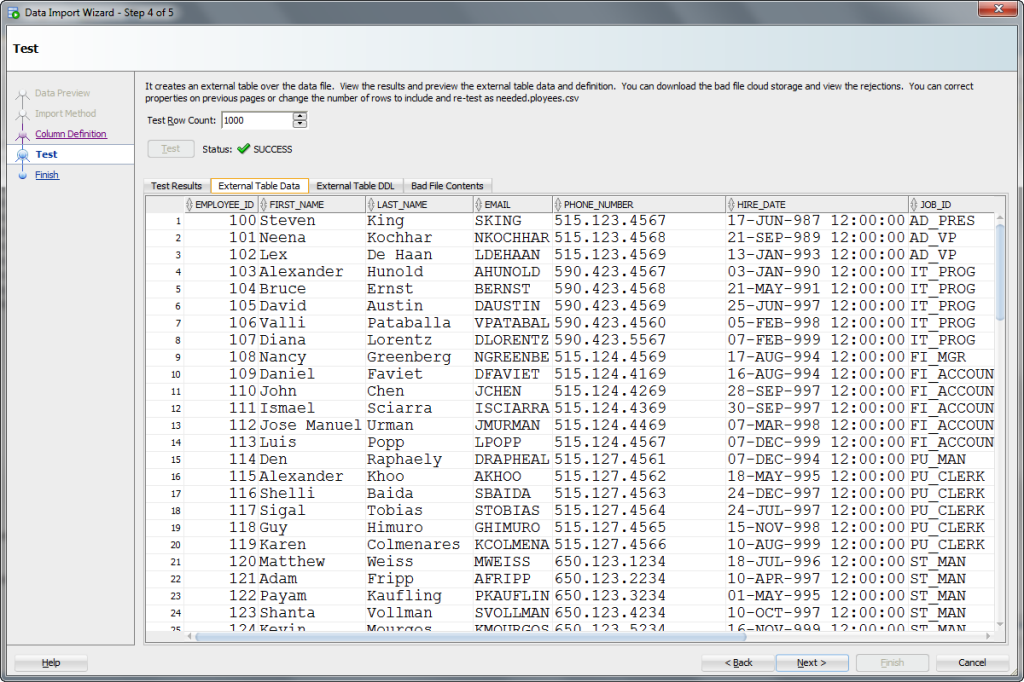

Let’s imagine you are a genius who never makes mistakes. You’ll get to witness yourself in all your glory when you run the test and see SUCCESS, a populated External Table Data panel and an EMPTY bad file contents panel.

So what we’re trying to achieve here is saving you a LOT of wasted time. We want to make sure the scenario works for say the first 1,000 records before we go to move the ENTIRE file over and process it. If there IS a problem, you can fix it now.

The test will simply create the External table and show the results of trying to query it via your load parameters as defined in the previous screens.

So it looks like it’s worked. Let’s go preview the data.

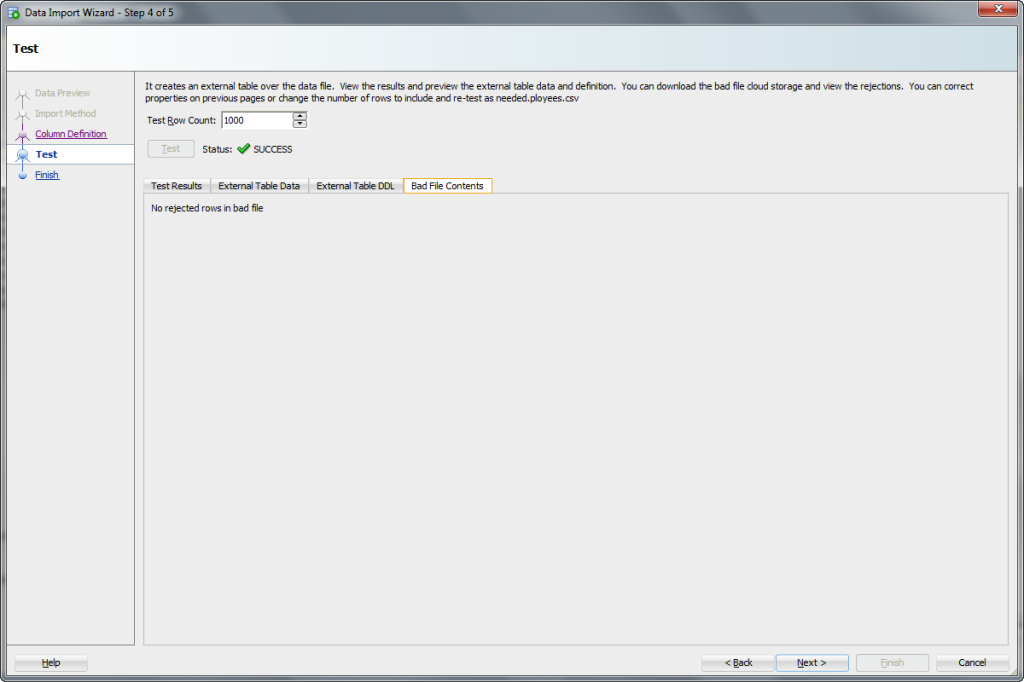

And just to make sure, let’s go peak at the rejected (Bad File Contents) panel.

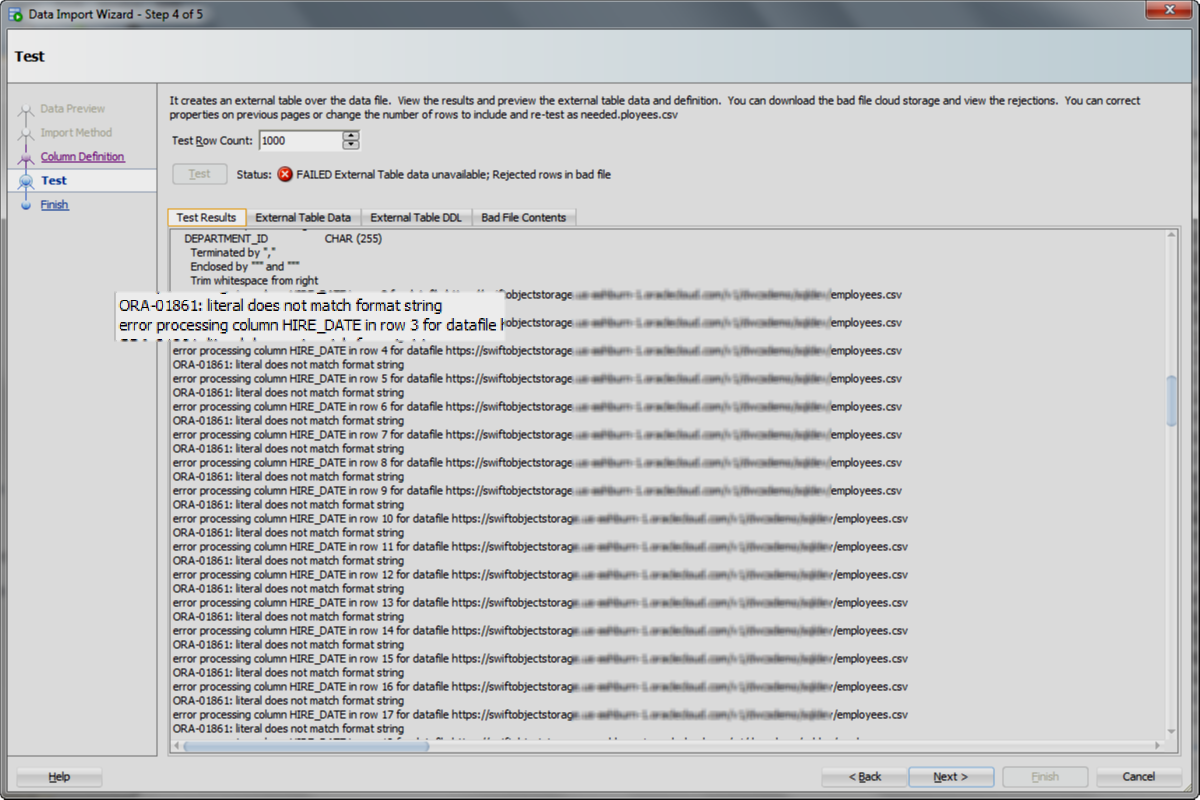

But Jeff, I’m not perfect, I made a boo-boo.

No worries, let’s see what it looks like when there is problem with the definition of the external table or with the data, or both.

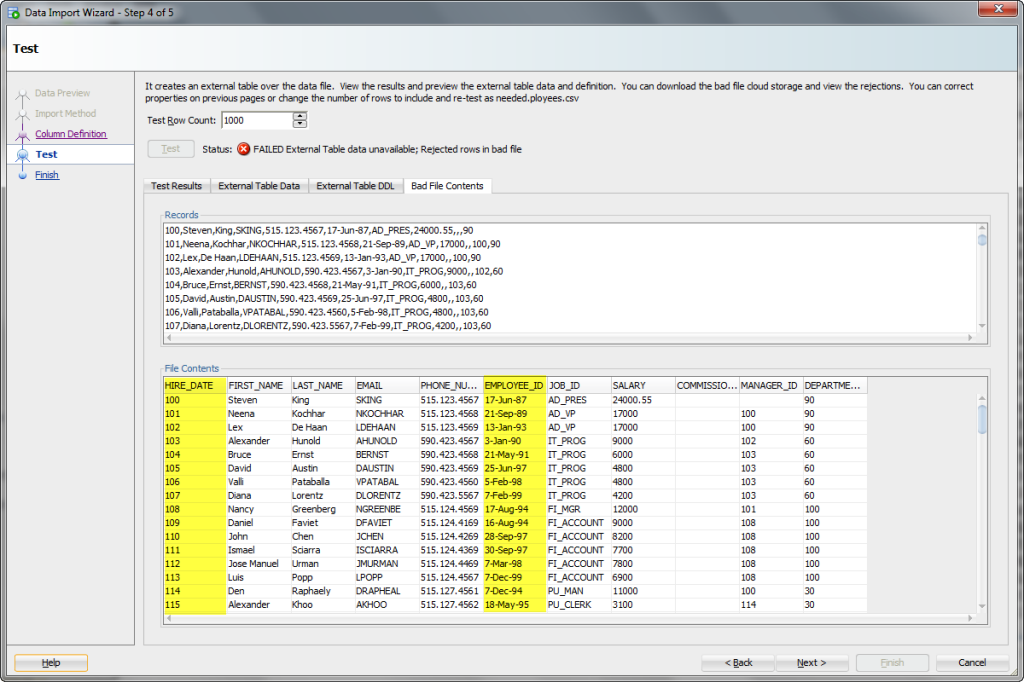

Man, it sure would be nice to SEE what that rejected row looks like.

Just click on the Bad File Contents!

So instead of starting over, just go back in the wizard, re-map the columns, test again.

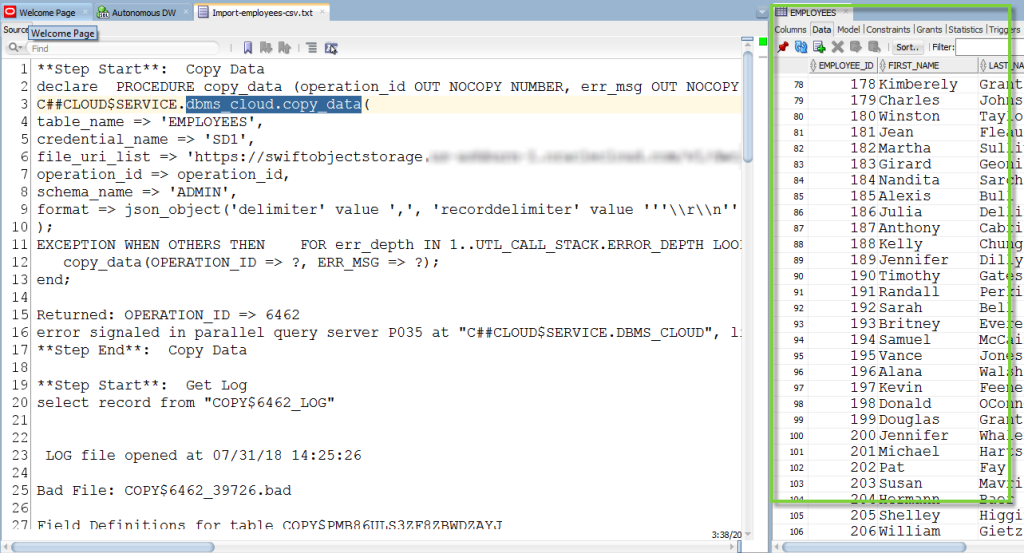

And THEN click ‘Finish’ to actually run the full scenario. And then when were done, we’ll have a log of the complete scenario and we can browse the table.

The Table!

We open the log of the scenario for you, and then you can manually browse the table like you’re used to. Or get to doing your cool reports, graphs, and SQL stuff.

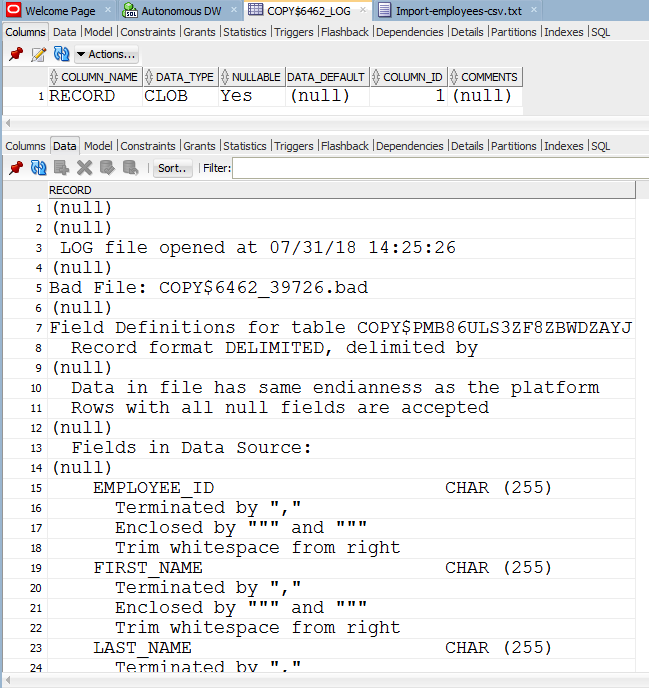

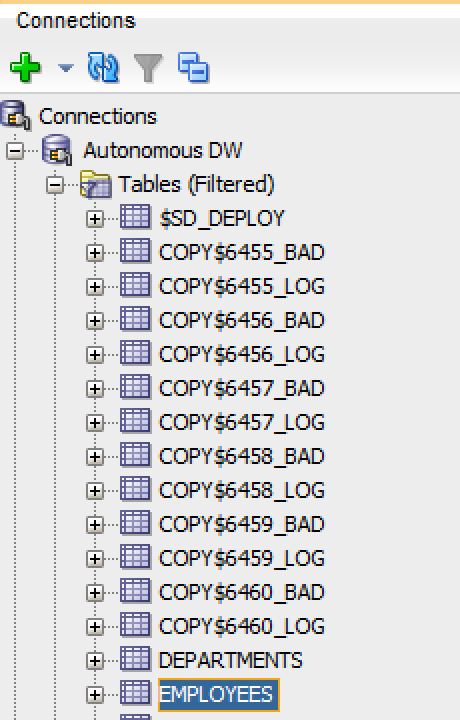

What are these tables?

You can nuke/drop these as needed, but they’re basically just a collection of CLOBs that show the contents of the logs and bad file from your SQL Dev DBMS_CLOUD runs.

What’s Coming Next?

We’re enhancing the Cart so you create deployments of multiples files and tables in a single scenario. And then run those as often as necessary.

We’re also working on SQL Developer Web so that there is a data loading facility there so you can get rocking and rolling right away without even having to pull up the desktop tool.

More news here later this year.