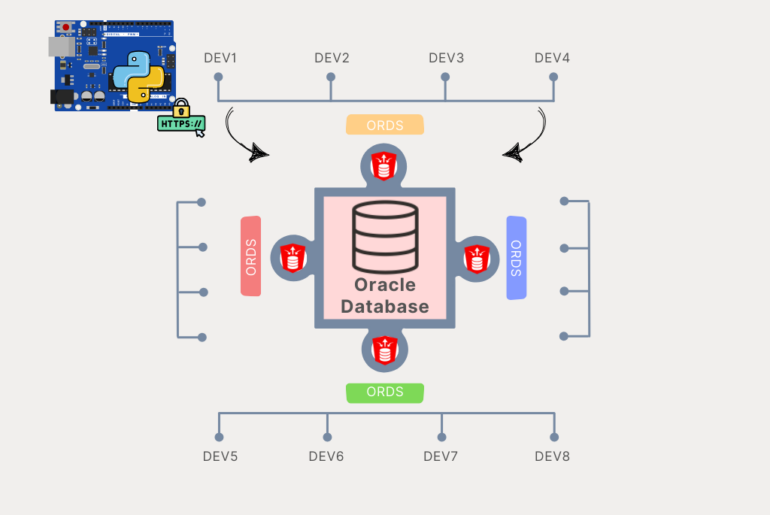

We introduced management API’s for the Oracle Database with Oracle REST Data Services (ORDS) version 19.1, and I talked about that here.

With ORDS 19.2 available as of last week, we have a few updates and toys for you to take advantage of.

You can find the complete list of DB API endpoints and the swagger doc here.

Or, if you find yourself on the official Database Docs page, you’ll find a link for the REST APIs there as well.

These APIs are available and supported for 11gR2 and the supported versions of 12, 18, and 19 – not just for 19c.

So what’s new?

The two big things of note are:

- improved Data Pump support

- Database Creation Assistant (DBCA)

For Data Pump, we have a more flexible setup for defining your Data Pump jobs AND we now also support EXPORTs and IMPORTs.

So let’s do an EXPORT, so I can then demo doing an IMPORT.

I’m just going to export two tables.

POST http://localhost:8080/ords/hr/_/db-api/19.2.0/database/datapump/export

And here’s my POST request body:

{

"datapump_dir": "DATA_PUMP_DIR",

"filter": "EMPLOYEES_COPY, HOCKEY_NO_PARTS",

"job_mode": "TABLE",

"threads": 2

}

When I make the request, ORDS replies with this response:

{

"job_name": "DATAPUMP_REST_EXPORT_20190805105213",

"owner_name": "HR",

"operation": "EXPORT",

"job_mode": "TABLE",

"state": "EXECUTING",

"degree": 2,

"attached_sessions": 0,

"datapump_sessions": 2,

"job_state": "EXECUTING",

"links": [

{

"rel": "collection",

"href": "http://localhost:8080/ords/hr/_/db-api/19.2.0/database/datapump/jobs/"

},

{

"rel": "describedby",

"href": "http://localhost:8080/ords/hr/_/db-api/19.2.0/metadata-catalog/"

},

{

"rel": "related",

"href": "http://localhost:8080/ords/hr/_/db-api/19.2.0/database/datapump/jobs/HR,DATAPUMP_REST_EXPORT_20190805105213/EXPDAT-2019-08-05-10_52_13.LOG"

},

{

"rel": "self",

"href": "http://localhost:8080/ords/hr/_/db-api/19.2.0/database/datapump/jobs/HR,DATAPUMP_REST_EXPORT_20190805105213/"

}

]

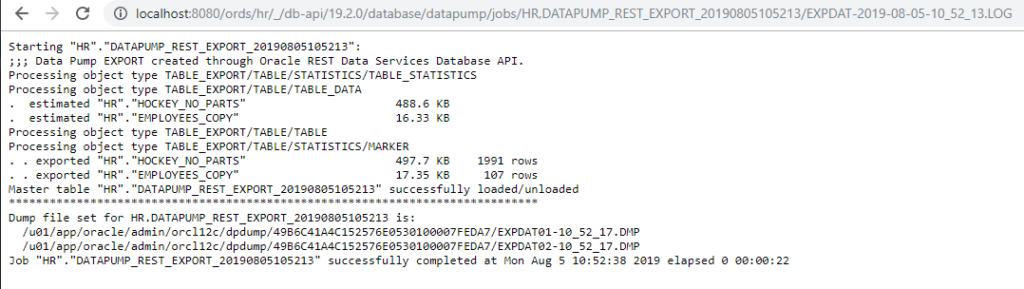

}There’s a link there to follow to get a LOG for the job, which I do follow and see what happened:

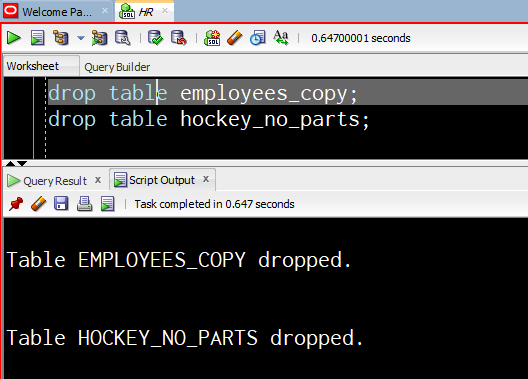

Now let’s bring it back!

Doing the import.

POST http://localhost:8080/ords/hr/_/db-api/19.2.0/database/datapump/import

And our VERY simple POST request body:

{

"datapump_dir": "DATA_PUMP_DIR",

"job_mode":"FULL",

"file_name":"EXPDAT%U-10_52_17.DMP"

}

I have 2 DMP files from the last job, so I have to use the %U to grab both of them for the IMPORT job.

And our response…

{

"job_name": "DATAPUMP_REST_IMPORT_20190805120954",

"owner_name": "HR",

"operation": "IMPORT",

"job_mode": "FULL",

"state": "EXECUTING",

"degree": 1,

"attached_sessions": 0,

"datapump_sessions": 2,

"job_state": "EXECUTING",

"links": [

{

"rel": "collection",

"href": "http://localhost:8080/ords/hr/_/db-api/19.2.0/database/datapump/jobs/"

},

{

"rel": "describedby",

"href": "http://localhost:8080/ords/hr/_/db-api/19.2.0/metadata-catalog/"

},

{

"rel": "related",

"href": "http://localhost:8080/ords/hr/_/db-api/19.2.0/database/datapump/jobs/HR,DATAPUMP_REST_IMPORT_20190805120954/EXPDAT-2019-08-05-10_52_13.LOG"

},

{

"rel": "related",

"href": "http://localhost:8080/ords/hr/_/db-api/19.2.0/database/datapump/jobs/HR,DATAPUMP_REST_IMPORT_20190805120954/IMPDAT-2019-08-05-12_09_54.LOG"

},

{

"rel": "self",

"href": "http://localhost:8080/ords/hr/_/db-api/19.2.0/database/datapump/jobs/HR,DATAPUMP_REST_IMPORT_20190805120954/"

}

]

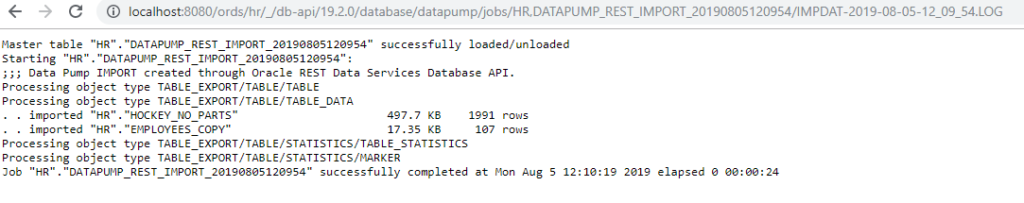

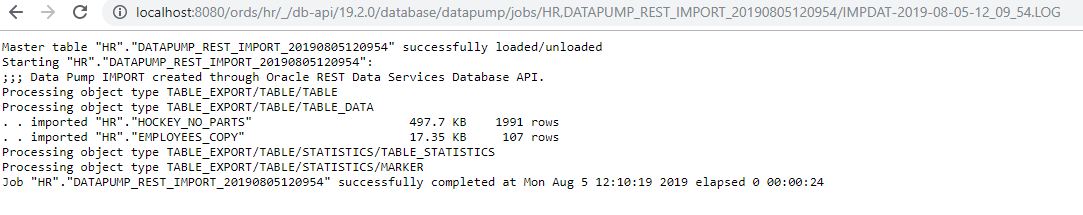

}And if we pull up the log…

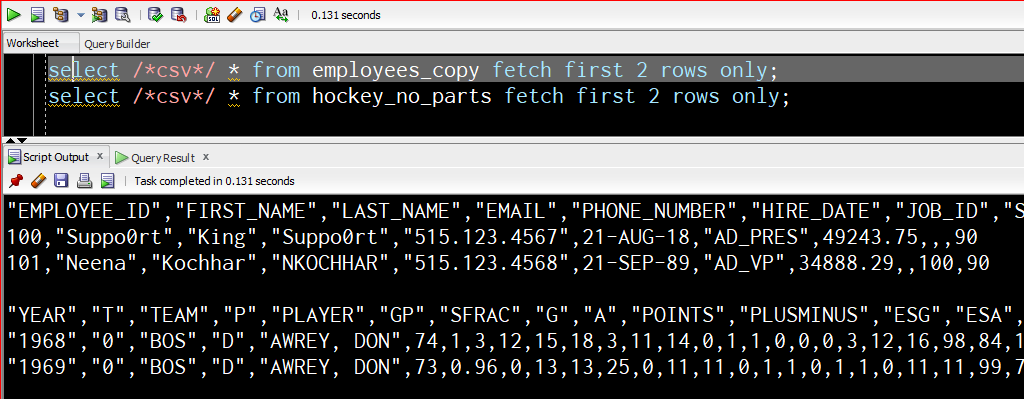

And let’s just query the data again to be sure…

There are now two versions of the API you can use.

/19.1.0/ /19.2.0/

And then there is /latest/ – which is a shortcut for /19.1.0/. If you try to POST to /19.1.0/…/import, you’ll get a 404, because it’s new for 19.2.

3 Comments

basically for import process is there any way in which I can give the path to the local directory where dumo files are present then ORDS will kind of move the files to the DUMP directory and then start the import process?

ords can’t move files on the database’s underlying OS

but the database maybe can…with a stored java procedure or even PL/SQL

When ords can do the job of providing the dump files to the user after the export is there any way in which ords can facilitate the movement of files to the database from some xyz location