Do as I say, not as I do.

Because I am like most of you, I am very lazy.

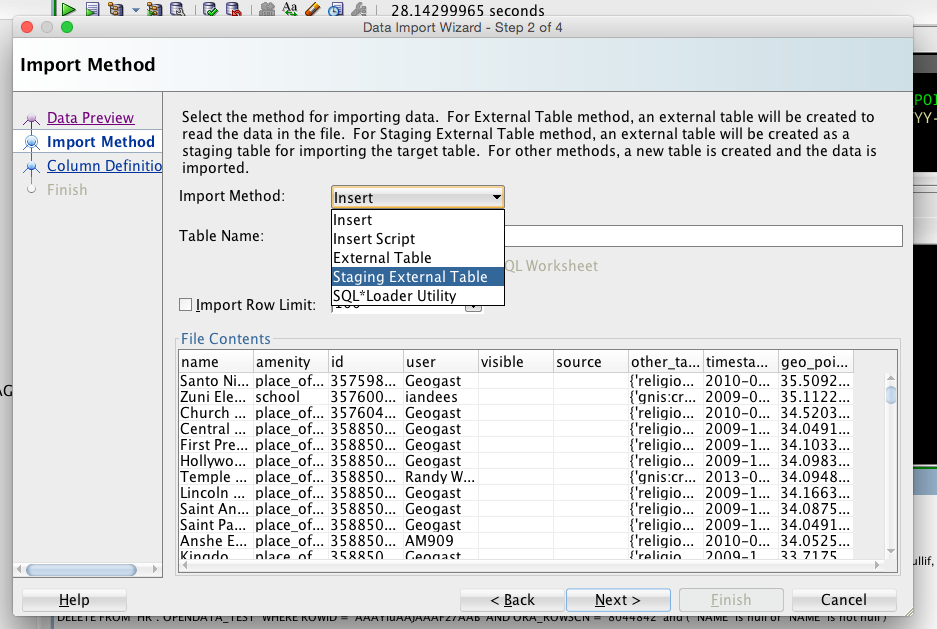

Case in point: loading some data from a CSV into an Oracle table. I can use a wizard in SQL Developer and in a few clicks, have it loaded. Usually I’m playing with a hundred rows. Or maybe a few thousand.

But this time I needed to load up about 150MB of CSV, which isn’t very much. But it’s 750k rows, and it was taking more than 5 minutes to run the INSERTs against the table. And I thought that was pretty good, considering. My entire setup is running on a MacBook Air and our OTN VirtualBox Database image.

I’m setting up a scenario that others can run, and the entire lab is only allotted 30 minutes. So I can’t reserve 10 minutes of that just to do the data load.

The Solution: EXTERNAL TABLE

If you have access to the database server via a DIRECTORY object, then you are good to go. This means I can put the CSV (or CSVs) onto the server, in a directory that the database can access.

This wizard is pretty much exactly the same as I’ve shown you before. There’s an additional dialog, and the output is a script that you run.

You need to give us the database directory name, the name of your file, and if you want an errors and logging file, what you want to call them as well.

But when we’re done, we get a script.

The script will create the directory, if you need it, grants privs, if you need them, and drop your staging table, if you want to. That’s why those steps are commented out.

And I tweaked my script even further, changing out the INSERT script to include a function call…but setting up the table from the CSV file was a lot easier using the wizard.

SET DEFINE OFF --CREATE OR REPLACE DIRECTORY DATA_PUMP_DIR AS '/Users/oracle/data_loads; --GRANT READ ON DIRECTORY DATA_PUMP_DIR TO hr; --GRANT WRITE ON DIRECTORY DATA_PUMP_DIR TO hr; --drop table OPENDATA_TEST_STAGE; CREATE TABLE OPENDATA_TEST_STAGE ( NAME VARCHAR2(256), AMENITY VARCHAR2(256), ID NUMBER(11), WHO VARCHAR2(256), VISIBLE VARCHAR2(26), SOURCE VARCHAR2(512), OTHER_TAGS VARCHAR2(4000), WHEN VARCHAR2(40), GEO_POINT_2D VARCHAR2(26) ) ORGANIZATION EXTERNAL ( TYPE ORACLE_LOADER DEFAULT DIRECTORY ORDER_ENTRY ACCESS PARAMETERS (records delimited BY '\r\n' CHARACTERSET AL32UTF8 BADFILE ORDER_ENTRY:' openstreetmap-pois-usa.bad' DISCARDFILE ORDER_ENTRY:' openstreetmap-pois-usa.discard' LOGFILE ORDER_ENTRY:' openstreetmap-pois-usa.log' skip 1 FIELDS TERMINATED BY ';' OPTIONALLY ENCLOSED BY '"' AND '"' lrtrim missing FIELD VALUES are NULL ( NAME CHAR(4000), AMENITY CHAR(4000), ID CHAR(4000), WHO CHAR(4000), VISIBLE CHAR(4000), SOURCE CHAR(4000), OTHER_TAGS CHAR(4000), WHEN CHAR(4000), GEO_POINT_2D CHAR(4000) ) ) LOCATION ('openstreetmap-pois-usa.csv') ) REJECT LIMIT UNLIMITED; SELECT * FROM OPENDATA_TEST_STAGE WHERE ROWNUM <= 100; INSERT INTO OPENDATA_TEST ( NAME, AMENITY, ID, WHO, VISIBLE, SOURCE, OTHER_TAGS, WHEN, GEO_POINT_2D ) SELECT NAME, AMENITY, ID, WHO, VISIBLE, SOURCE, OTHER_TAGS, to_timestamp_tz(COL_TIMES, 'YYYY-MM-DD"T"HH24:MI:SSTZR'), GEO_POINT_2D FROM OPENDATA_TEST_STAGE3 ;

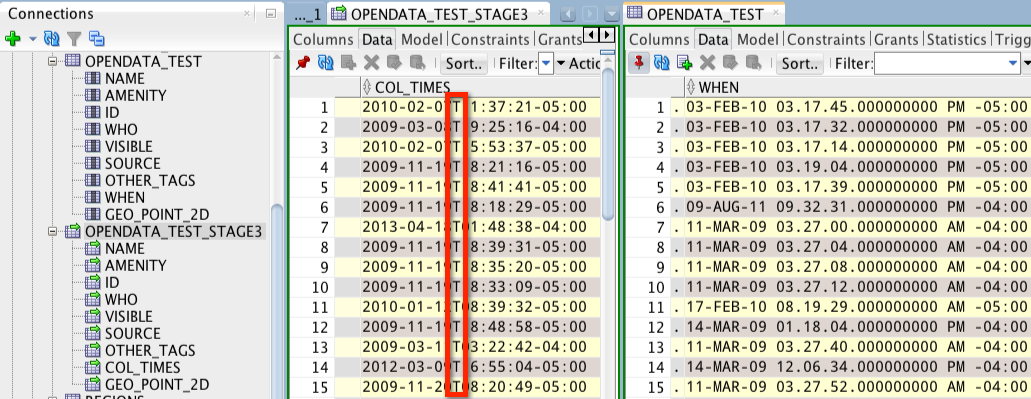

A Small Tweak

My TABLE has a timestamp column. I REFUSE to store DATES as strings. It bites me in the butt EVERY SINGLE TIME. So what I did here, because I’m lazy, is I loaded up the EXTERNAL TABLE column containing the TIMESTAMP as a VARCHAR2. But in my INSERT..SELECT, I throw in a TO_TIMESTAMP to do the conversion.

The hardest part, for me, was figuring out the format that represented the timestamp data. After a few trial and errors I managed that

2009-03-08T19:25:16-04:00 equated to a YYYY-MM-DD”T”HH24:MI:SSTZR. I got tripped up because I was single quote escaping the ‘T’ instead of double quoting it “T.” And then I got tripped up again because I was using TO_TIMESTAMP() vs TO_TIMESTAMP_TZ().

With my boo-boos fixed, instead of taking 5, almost 6, minutes to run:

747,973 ROWS inserted. Elapsed: 00:00:27.987 Commit complete. Elapsed: 00:00:00.156

Not too shabby. And the CREATE TABLE…STORAGE EXTERNAL itself is instantaneous. The data isn’t read in until you need it.

Last time I checked, 28 seconds vs 5 minutes is a lot better. Even on my VirtualBox database running on my MacBook Air.

14 Comments

External tables is more flexible and faster

Yes, but require access to the DB server’s filesystem.

After following all the steps, I created this :

SET DEFINE OFF

–CREATE OR REPLACE DIRECTORY EXT_TAB_DIR AS ‘D:\R\ADI’;

–GRANT READ ON DIRECTORY EXT_TAB_DIR TO USER;

–GRANT WRITE ON DIRECTORY EXT_TAB_DIR TO USER;

–drop table EXT_LOAD_STAGE;

CREATE TABLE EXT_LOAD_STAGE

( ID NUMBER(20),

NAME VARCHAR2(100),

INDUSTRY VARCHAR2(100),

INCEPTION NUMBER(20),

EMPLOYEES NUMBER(20),

STATE VARCHAR2(100),

CITY VARCHAR2(100),

REVENUE VARCHAR2(100),

EXPENSES VARCHAR2(100),

PROFIT NUMBER(20),

GROWTH VARCHAR2(100))

ORGANIZATION EXTERNAL

( TYPE ORACLE_LOADER

DEFAULT DIRECTORY EXT_TAB_DIR

ACCESS PARAMETERS

(records delimited BY ‘\r\n’

NOBADFILE

NODISCARDFILE

NOLOGFILE

skip 1

fields terminated BY ‘,’

OPTIONALLY ENCLOSED BY ‘”‘ AND ‘”‘

lrtrim

missing field VALUES are NULL

( ID CHAR(4000),

NAME CHAR(4000),

INDUSTRY CHAR(4000),

INCEPTION CHAR(4000),

EMPLOYEES CHAR(4000),

STATE CHAR(4000),

CITY CHAR(4000),

REVENUE CHAR(4000),

EXPENSES CHAR(4000),

PROFIT CHAR(4000),

GROWTH CHAR(4000)

)

)

LOCATION (‘P3-Future-500-The-Dataset.csv’)

)

REJECT LIMIT UNLIMITED;

select * from EXT_LOAD_STAGE WHERE ROWNUM <= 100;

INSERT INTO EXT_LOAD (ID, NAME, INDUSTRY, INCEPTION, EMPLOYEES, STATE, CITY, REVENUE, EXPENSES, PROFIT, GROWTH)

SELECT ID, NAME, INDUSTRY, INCEPTION, EMPLOYEES, STATE, CITY, REVENUE, EXPENSES, PROFIT, GROWTH FROM EXT_LOAD_STAGE ;

After that how can I find EXT_LOAD_STAGE, there is no such tables in my schema.

Hello Jeff, I’ve created a directory that points to a mounted network drive but getting the error below when executing a select on the external table (and I’m sure that the ext_file.csv exists in the directory). I’ve read that this may be due to Oracle not having OS permissions to the folder, but I’m able to import and export data files to the same folder just fine. I’m using Windows 7, Oracle 12.1.0, and SQL Developer 4.1.1.19. Any Suggestions?

SQL Error: ORA-29913: error in executing ODCIEXTTABLEOPEN callout

ORA-29400: data cartridge error

KUP-04040: file ext_file.csv in EXT_DATA_DIR2 not found

29913. 00000 – “error in executing %s callout”

*Cause: The execution of the specified callout caused an error.

*Action: Examine the error messages take appropriate action.

Thanks

the OS user that the db server processes are running as, ‘oracle’ needs Read/Write pris on your directory.

>>but I’m able to import and export data files to the same folder just fine

Import and Export how – using data pump and that same directory?

I’m not too familiar with the data pump feature…I was using the “Import Data” and “Export” wizards from the right-click menu in SQL Developer to import and export data. Are these permissions different? Also, this may be a Windows question but any idea how to find the name of the OS user? Hopefully I can get the network admin to add this user and resolve the problem.

Thanks

Where exactly is your database?

I have a local database set up on my laptop, and I’m trying to point the external table directory to our network drive.

Mmmmmmm, mapped a network drive? Your network user with privs to that directory needs to be the same user that runs your oracle on your laptop…easier to copy the files down to your laptop maybe.

I was trying to work around that since I don’t have much hard drive space but that’s another issue lol. Thanks Jeff

Never mind….. Here’s the answer:

PRIMARY_CARE_DATE_1 CHAR(4000)

DATE_FORMAT DATE MASK “YYYYDDD”

NULLIF (“PRIMARY_CARE_DATE_1” = “0000000”),

easy peasy!

BTW: If you’re trying to migrate from a SQL*Loader-based system to external tables, you can use the EXTERNAL_TABLE=GENERATE_ONLY option with SQL*Loader to generate external-table SQL code directly from your existing control file.

Hi Jeff,

I’m trying to follow your example using my own (very large) data files.

This may be out of the scope of SQL Developer, but how would you handle converting placeholder values in the CSV file into NULLs when importing via external tables?

SQL*Loader control files can include a NULLIF function, e.g.:

PRIMARY_CARE_DATE_1 DATE “YYYYDDD” NULLIF PRIMARY_CARE_DATE_1 = ‘0000000’

The external table’s definition picks up the ordinal date format, all right:

PRIMARY_CARE_DATE_1 CHAR(4000) date_format DATE mask “YYYYDDD”

But, is there similar functionality to NULLIF with external tables? Or would I need to adjust the INSERT… SELECT manually, as in you “small tweak” example above?

Thanks

Jeff,

The last line of your script refers to OPENDATA_TEST_STAGE3 — is that a typo?

Can you comment as to the speed of loads via external tables vs. SQL*Loader?

not a typo per se, i was running the scenario more than a few times

as to vs SQL*Loader. The major difference is that SQL*Loader is a client tool. So you’re running it over a network, usually. It’s connecting to the database, pushing the data over, etc.

External tables are all happening server side. It’s probably always going to be faster. And it’s MUCH more flexible. Once the external table is available, you can load the data to permanent tables as many different ways as you want.

Most folks I know refer to External Tables as ‘the new SQL*Loader.’ The only downside – it requires server side access.